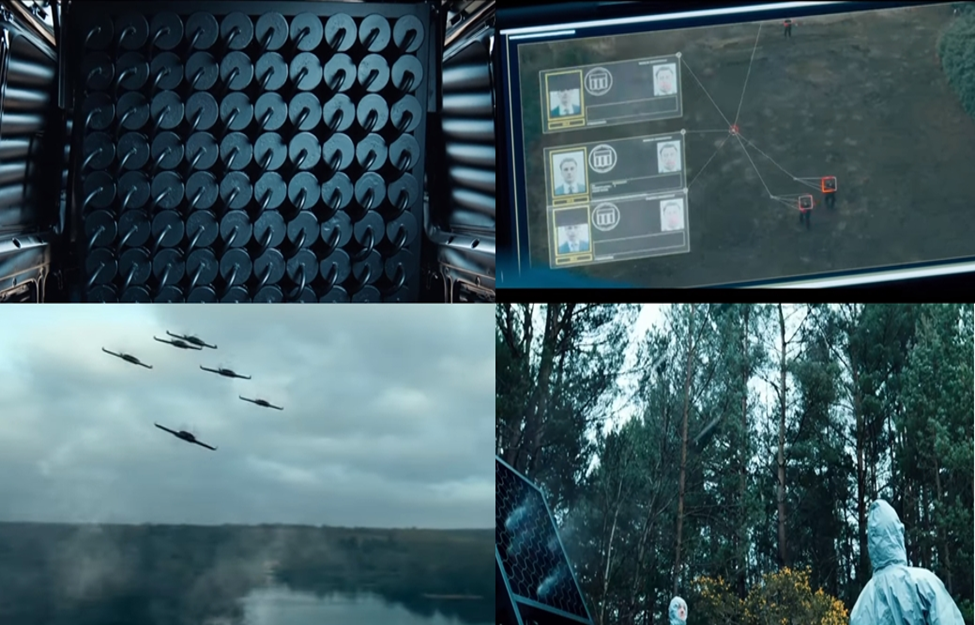

During the ongoing war between Russia and Ukraine, photographs have surfaced showing what appears to be a KUB-BLA, a Russian “suicide” drone that is claimed to fly itself and to use AI-powered facial recognition to identify its target [1]. This raises concern that AI will play a greater role in making lethal decisions, and about how autonomous drones might alter the course of war.

Self-flying drone

Self-navigating drones are given predefined GPS coordinates for their departure and destination points. Thanks to advances in AI-enabled computer vision, they can find the optimal route and reach the destination without manual control. The built-in components of a self-flying drone include computerized programming, propulsion and navigation systems, GPS, sensors and cameras, programmable controllers, and equipment to automate the flight [2].
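To make the GPS part of this concrete, here is a minimal Python sketch of how a navigation system might compute the great-circle distance and the initial compass heading between a departure and a destination coordinate. It assumes a spherical Earth (mean radius 6,371 km) and uses the standard haversine and initial-bearing formulas; the function names and coordinates are hypothetical and are not taken from any real drone's software.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; assumes a spherical Earth


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # min() guards against a tiny floating-point overshoot above 1.0
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def initial_bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Initial compass heading (0 = north, 90 = east) from point 1 toward point 2."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    return (math.degrees(math.atan2(y, x)) + 360.0) % 360.0


if __name__ == "__main__":
    # Hypothetical waypoints: one degree of longitude apart on the equator
    print(f"{haversine_km(0.0, 0.0, 0.0, 1.0):.1f} km")  # about 111.2 km
    print(f"{initial_bearing_deg(0.0, 0.0, 0.0, 1.0):.0f} degrees")  # due east
```

A real autopilot would typically recompute the heading continuously as the drone moves, since the great-circle bearing changes along the route.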

Computer Vision

Computer vision plays a primary role in detecting objects and in analysing and recording information on the ground while the drone is in the air [3]. Onboard image processing and a neural network are used to detect, classify, and track objects while the drone travels. The network can detect many kinds of objects, such as vehicles, buildings, trees, objects on or near the surface of the water, and diverse terrain. It can also identify living creatures with a high degree of accuracy. Using computer vision, the drone maps its surroundings in 3D, which helps it maneuver and avoid collisions with obstacles.
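Once the surroundings have been mapped, finding a collision-free route is a path-planning problem. The sketch below is a simplified, hypothetical illustration: it assumes the 3D map has already been reduced to a 2D occupancy grid (1 = obstacle, 0 = free space) and uses the classic A* search with unit-cost, four-directional moves. Real drones plan in 3D with more sophisticated planners, and nothing here reflects the KUB-BLA's actual software.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column) in the occupancy grid


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid where 1 marks an obstacle; None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance: never overestimates on a unit-cost 4-connected grid
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    g_cost: Dict[Cell, int] = {start: 0}

    while open_heap:
        _, g, cur = heapq.heappop(open_heap)
        if cur == goal:
            # Walk the parent links back to the start, then reverse
            path: List[Cell] = []
            node: Optional[Cell] = cur
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if g > g_cost[cur]:
            continue  # stale heap entry; a cheaper route to cur was already found
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    came_from[nxt] = cur
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None


if __name__ == "__main__":
    # Hypothetical map: a wall in row 1 with a single gap on the right
    grid = [
        [0, 0, 0, 0],
        [1, 1, 1, 0],
        [0, 0, 0, 0],
    ]
    print(astar(grid, (0, 0), (2, 0)))  # the only route goes around the wall via (1, 3)
```

Because the Manhattan-distance heuristic never overestimates the remaining cost on this grid, A* is guaranteed to return a shortest path when one exists.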

Deep Machine Learning in Object Detection & Drone Navigation

GPS navigation and computer vision alone are not enough to solve the problem of collision avoidance. To teach a drone to avoid obstacles at high speed and to recognize a variety of objects, both static and moving, deep learning algorithms must be trained on large amounts of data. A wide variety of entities are labeled so that the drone can detect them and choose the direction and controls needed to fly safely around obstacles in its path.
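The core idea of learning from labeled examples can be shown at toy scale. The sketch below is a deliberate simplification: instead of a deep neural network, it trains a single-layer logistic-regression classifier with batch gradient descent. The two features (an object's normalized proximity and closing speed) and the six labeled samples are made up for illustration; a label of 1 means the object should be treated as an obstacle to steer around.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs: List[Sequence[float]], ys: List[int],
                   lr: float = 1.0, epochs: int = 2000) -> Tuple[List[float], float]:
    """Batch gradient descent on the mean log-loss; returns (weights, bias)."""
    n, d = len(xs), len(xs[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(xs, ys):
            # For log-loss, d(loss)/d(z) is simply (predicted probability - label)
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for j in range(d):
                grad_w[j] += err * x[j] / n
            grad_b += err / n
        w = [wi - lr * gj for wi, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    """Return 1 (avoid) if the predicted probability is at least 0.5, else 0."""
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5)


# Made-up labeled samples: (proximity, closing_speed), both scaled to [0, 1]
TOY_FEATURES = [(0.9, 0.8), (0.8, 0.9), (0.7, 0.6), (0.2, 0.1), (0.1, 0.3), (0.3, 0.2)]
TOY_LABELS = [1, 1, 1, 0, 0, 0]  # 1 = obstacle to avoid, 0 = safe to ignore

if __name__ == "__main__":
    weights, bias = train_logistic(TOY_FEATURES, TOY_LABELS)
    print(predict(weights, bias, (0.85, 0.85)), predict(weights, bias, (0.15, 0.15)))
```

A deep network stacks many such layers and learns its own features directly from camera images, which is why it needs far more labeled data than this toy example.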

How devastating is a “suicide” drone?

According to its manufacturer, ZALA Aero, a subsidiary of the Russian arms company Kalashnikov, each KUB-BLA carries a 3-kilogram explosive charge that detonates when the drone dives into its target. The blast is powerful enough to obliterate a human body or destroy a vehicle.

The drone’s computer vision uses intelligent facial recognition to identify the target in midair, and machine learning algorithms train it to dodge incoming attacks and calculate the optimal course. The drone is highly effective at detecting and chasing its target to ensure a hit.

Capable of flying at up to 130 km/h for 30 minutes after launch, the drone leaves a ground target almost no chance to escape. It is unknown whether the KUB-BLA can be used in a drone swarm attack.

References

[1] https://www.wired.com/story/ai-drones-russia-ukraine/

[3] https://www.wevolver.com/article/artificial-intelligence-in-drone-technology/

Very interesting article! These things always stir up many kinds of emotions for me. It’s super interesting to see where AI is heading and how accurate it’s getting, but it’s also absolutely terrifying. I can’t help but remember that one episode of Black Mirror titled “Hated in the Nation” where killer drones the size of bees start killing very specific people with deadly accuracy. Honestly, by the sounds of things, it doesn’t seem like we are that far off from miniature killer, autonomous drones. However, I’m not surprised this is where things are going. Having very little human effort for maximum output is kind of what we as a human race are constantly striving for. So having drones that can do all the work for us without us even lifting a finger makes total sense.

Great post! It’s scary to think about how powerful an army of drones can be. In the invasion of Ukraine, we can clearly see the mismatch in technology between the Ukrainian forces and the Soviet-era military of the Russians. If NATO supplies Ukraine with more high-tech gear powered by AI (like drones), we could see the true power of a modern military.

The existence of these kamikaze drones is quite scary. It appears that with every passing day we stray closer and closer to autonomous warfare, where patriotism and willpower are no longer relevant in the equation of warfare, and where war is decided by who has the biggest wallet and the fanciest guns. Here’s hoping we’re still years away from such a dystopian imperialistic world.

The only good thing about drones, in my opinion, is how easy they are to shoot down. At the moment, it seems these are being used more to terrorize civilians than push back against an opposing force. I agree that there are many reasons for concern. Thank you for your informative article covering their capabilities.

I saw an interview with Elon Musk in which he mentioned how powerful drones can become and how their unique abilities could be used for assassination or mass destruction. He said that many years before this war between Ukraine and Russia. Drones are, of course, very useful for taking aerial videos, which previously required helicopters and unaffordable equipment. I also learnt that small drones can be used to deliver food, much like DoorDash or Uber Eats, and I found this use of technology to solve such problems really fascinating. However, now that I know drones can do much more than that and can be used by any organization for terrorism, I think drone use should be more regulated and licensed so that drones can only be used for good and ethical purposes. Even if drone use is regulated, the use of drones in war cannot be stopped. They can give a huge competitive advantage to the nation that uses them to win a battle, or even a war like the one between Ukraine and Russia.

This is a very good post. With the development of drone technology, more and more people are owning drones. Unfortunately, there are not very robust laws regarding drones. In some places, people even fly their own drones around airports with the purpose of grounding aircraft. This behavior is extremely dangerous, but there is no good way to solve this problem. Although drones were invented to let people see buildings from different angles, they have characteristics that can turn them into horrible war machines with only a few modifications. Therefore, governments should strengthen drone regulation rather than wait for a tragedy to happen and regret it afterwards.

It’s very interesting to see how technology, and more importantly AI, is developing at such a fast pace. The ability to use facial recognition while maneuvering in the air, avoiding all kinds of terrain with extreme precision, is fascinating to see. But at the same time, it’s also very worrying to see the direction it’s going; I would not be surprised if AI has a much bigger role in modern warfare in the years to come. Great blog post!

Very interesting and frightening article. It is always cool to learn about technological advances, but they are always a double-edged sword. Artificial intelligence can be of both great value and great harm, as the drones in this article show. It is worrying to see how much reliance there is on technology in warfare, especially now that AI can dodge and maneuver around defence mechanisms to secure a hit on a locked-on target. AI will most likely play a vital role in future warfare, and with it many more complications will arise. Thank you for highlighting this news. Once again, great read!

An interesting article for sure. I have heard about similar tactics being used before, and many people took issue with drones because they caused a lot of civilian casualties. Combining AI facial recognition with drones will likely reduce these earlier criticisms, but it’s also scary to see how effective machine learning is for war. Being able to recognize and dodge attacks while travelling at 130 km/h is quite impressive.

I wonder if there is any scientific discovery that has not been turned into a humanitarian disaster. I am sure machine learning was invented to reduce the amount of work humans have to do. With machine learning, one can hand many tasks to a computer while humans focus on important problems. However, with advancements in machine learning, many are realizing how effective it could be on the battlefield. I think the Ukraine-Russia conflict is just the beginning of AI warfare. Many countries are watching its effectiveness, and I would not be surprised if the next great war is fought purely with machines.

I found this article very interesting. I think AI technology is amazing, but it is such a double-edged sword. It is capable of doing so many great things, automating processes and enabling great technological advances, but when placed in the hands of a malicious entity, it can be absolutely devastating to mankind. Though it is unfortunate that this is the case here, with autonomous drones being used to kill people, the technology is still impressive. I do wonder what precedent this sets for, God forbid, future warfare, but unfortunately this is only the beginning of combat AI.

Very well written and interesting, though it makes me so sad to read. These drones are a technological wonder, and the fact that they are used almost exclusively in war makes me so sad and disgusted. This quote that you wrote really stuck out to me:

“Capable of reaching highest speed of 130 km/hr for 30 minutes after launch, it is almost impossible for a ground target to escape.”

That really puts into perspective how much these drones have changed modern warfare, and how terrifying it would be to face them. It reminds me of the story of the 13-year-old Pakistani boy who said he no longer loved blue skies, because drones don’t fly on cloudy days (1).

1. https://www.theatlantic.com/politics/archive/2013/10/saddest-words-congresss-briefing-drone-strikes/354548/

Very interesting article! It really goes to show just how far AI has come, though unfortunately in a poor direction. As programmers and coders, what we do can have incredible implications for the world as it moves forward, and this is something we MUST be aware of. I think this is an incredible example of that. Facial recognition in this capacity is technologically incredible, but what about the consequences that obviously come with it? As someone above said, they were reminded of the Black Mirror episode “Hated in the Nation”, and I can’t help but agree. It’s an astute observation and maybe even a hint of what we are progressing towards (though I hope not).

At the end of the day, I am all for the progression of AI and science as a whole, I just hope not to see it used to commit heinous acts. Unfortunately, that seems to be exactly what is happening here (and in other places around the world).

Hi,

It is scary how technologies are being used in war these days, as in cyberwarfare. I’ve always been amazed by what AI technologies can do, but this incident really got me thinking! AI can be so convenient but terrifying at the same time. I feel that a few years from now, AI will be even more involved in our lives and will have a huge impact on them.

I find this really interesting, especially when considering false positives. Surely these drones are not correct 100% of the time, and thus they could potentially kill friendly soldiers or civilians. The more interesting question is the number of “correct” kills compared with the number of “incorrect” kills. For example, if the drone is correct 95% of the time, the remaining 5% seems insignificant. However, if you had the necessary data at your fingertips, this could work out to a (potentially) large number of incorrect kills.

Great post by the way!

It’s interesting to see drone technology as a prospect for modern warfare. Acquiring machines and technology for military purposes could lead to more strategic forms of warfare that lessen the risk of friendly casualties during missions. These technologies can be used not only for combat but also for special operations, surveillance missions, and reconnaissance. As you outlined in your article, drones can use computer vision and object detection, which can also give a better understanding of battlefield conditions.

Though drones offer significant utility in combat, I believe they serve a better purpose in helping civilians caught in the crossfire of these devastating attacks. For example, drones can be beneficial in search and rescue missions by facilitating quicker emergency response and by surveying destroyed infrastructure to search for survivors. Furthermore, they can transport medical supplies to these disaster sites.

Drones are definitely one of those technologies with great ramifications, such as invasion of privacy and the ability to initiate acts of violence. It’s scary to see what kind of power they possess with advancements in computer vision to detect objects, people, faces, etc. As drones continue to be used not just for military purposes but as tools in our society that serve purposes like delivery or surveillance, I believe the ramifications will only continue to grow in an oddly dystopian manner. Thank you for the informative post.

One thing to note is that even next-generation fighter jets will have the capability to operate autonomously. It’s great to see all of this technological innovation, but I don’t think legislation is keeping up with the pace of innovation. How would autonomous hardware be held accountable for a “wrong” decision made in error? For example, let’s say an innocent person was killed in a conflict zone due to a “bug” in its programming: would the military take responsibility? The developer? Any military equipment with AI can lead down a slippery slope of multiple sides denying accountability in the event of an unintended consequence.

One of the things I found interesting about this blog is that drones can learn on their own. The algorithm in the drone can mark and store the obstacles it scans during flight, so that when it encounters such marked obstacles in future flights, it can quickly dodge them while moving at high speed. I know that drones have a similar function that stores scanned terrain and transmits it to a computer to generate images. Also, this is the first time I have learned about drones being used in warfare.

An interesting blog post! AI and drones have been steadily making their way onto the battlefield and producing tactical and strategic advantages for the countries that use them. We first saw their use during the ‘War on Terror’, but only recently have we seen them used in inter-state conflicts. Besides the Russo-Ukrainian War, the Armenia-Azerbaijan War of 2020 saw Azerbaijan deploy drones on a huge scale to devastate Armenia’s armed forces, bringing Azerbaijan a strategic victory at little cost. Now we are seeing them on the battlefield again in Eastern Europe. Drones make killing one’s targets less costly in manpower while still allowing a fatal blow to be dealt to the enemy. With the world watching, it will be interesting to see how high-ranking military officials and politicians learn from this conflict and adapt their own military doctrines for future conflicts. As it stands, the massive use of armoured vehicles, tanks, and aircraft is making drones a viable and cheaper way for each side of the conflict to deal critical blows.

The technology used for these drones is quite cool and innovative, but it is sad that they are using it to kill people instead of using it for something more productive, like delivery or construction. I also think that these AI drones are not ethical, because if something goes wrong, like a bug in the program, it could lead to serious harm to civilians or even to one’s own comrades, and no one really has to take responsibility for it because a computer did it, not a human.

Very interesting post on the capabilities of technology, and of drones in particular, in modern warfare. It makes you wonder what else drones will be capable of in the future, even in non-warfare applications. It’s also scary to think about errors or malfunctions in these drones and their programming, especially when it comes to facial recognition, and the damage that could result.

Hey, that was a fantastic post. It’s amazing to see where AI is headed and how accurate it is becoming, but we’re also hearing of people using it to cause havoc in our society. Our human tendency is to build things that are hard to make, yet this is a double-edged sword. As the drones in this article show, artificial intelligence can be both beneficial and harmful. I strongly believe that AI will play a significant part in future warfare, which will create more problems in our society.

The ramifications of these drones could be horrific. If some organization found a way to access the software, the network, or the communications between the drone and the system controlling it, criminals could gain access to incredibly lethal weapons capable of taking out anyone they like. If there’s anything that can be said about the power of networks and computers, it’s that your own assets can be used against you just as quickly as they can be used for you. Hopefully, for the sake of civilians and of the people who developed these drones, they come with state-of-the-art cybersecurity. I personally don’t want any hacked or rogue explosive drones coming my way.

Great post! It’s amazing to see where technology has taken us, but also terrifying to see what it is capable of. It’s hard to root for the continual advancement of technology in the name of a “better world” when that same technology can be used to destroy us. It’s disappointing that with such breakthroughs in AI and machine learning, some choose to use this technology to aid them in war and destruction rather than directing our societal efforts toward advances in, say, medical research. As far as these drones go, I hope their internal computer security systems are up to par, as hacked drones could turn out very badly.

It is frightening to see this and then think about what AI may be capable of and used for in the future. I must admit, though, that learning how the AI can navigate and avoid collisions is quite fascinating. For me, the biggest fear factor was the idea that someone could use a small drone to deal enough damage to blow up a car from a remote location. Plus, unlike nuclear weapons, drones seem much more accessible to a wider range of people to obtain or build. Overall, though, I am glad this was brought to my attention through such an informative post, and whether for better or for worse, seeing how technology can be applied is always interesting.

That was quite a read! To think, we went from real-life kamikaze pilots in WW2 to kamikaze drones in less than 80 years. These KUB-BLA drones seem extremely dangerous, especially given that they are being used by an aggressive world power such as Russia. Although I myself am fascinated by machine learning and AI, their use in warfare only instills fear in the hearts of regular people. I have heard a lot about how advanced military research is, and that we, the general public, can barely get a glimpse of this futuristic technology.

The fact that militaries go above and beyond to create such killing machines saddens me, as I think such brainpower could be used to better society in other ways. I have always wondered whether we will ever reach a point where we do not even need real human soldiers; the power of AI and robotics might make human souls on the battlefield obsolete. All in all, a very informative post, Chloe.

It is very interesting to see how many different areas AI is being used in. This application sounds very similar to self-driving cars, but it is one I had not thought of (and would prefer did not exist). While on a technical level this poses some very interesting questions, and the solutions to those can be used in many positive and beneficial ways, this is certainly not one of them. If anything, it shows how any technical or scientific advancement can be used for malicious causes, even if the inventor did not plan for such applications or did not even consider the possibility of malicious use.

Interesting post. I had only ever heard of soldier-controlled drones being used in warfare, and didn’t realize that AI and drone technology had advanced to this degree. I think the use of AI technology in conjunction with drones raises many ethical issues, especially given the serious damage they can cause and the war that is going on. There can be a lot of bias in AI code that alters the way drones perceive the world around them, so it is important that governments prioritize creating regulations and legislation to control how drones can be used and to prevent misuse by private or malicious entities in the future. Additionally, if mistakes or incorrect targeting occur, it can be difficult to assign blame. There is no soldier behind the trigger, so should the team that built the AI be held accountable? What about the government that approved the program? I cannot answer these questions with certainty, but they will undoubtedly be raised in the future as drone technology improves.

Great article! Makes me wonder if they have any security measures in place to protect bystanders. Since the payload seems fairly small relatively speaking, it makes me think about how one could possibly thwart the facial recognition of the KUB-BLA drones.

At odds with the clichéd trolley-problem analogy that’s often invoked in any discussion of autonomous AI, I’m actually in favour of AI’s increasing prevalence in human affairs, even in the extreme case of warfare. In recent years, AI systems have become more proficient than humans at certain complex activities. The best example is autonomous vehicles. It’s been shown that autonomous vehicles are generally less prone to making errors than humans, and when they do get into accidents, it’s almost always the fault of the other vehicles involved. If AI can be improved to the point where it demonstrably outperforms humans in risky tasks, shouldn’t it be allowed to do them? I’m not trying to be glib here; I understand that AI systems have their own biases and faults. But those biases are often inherited from their creators and can be ironed out much more easily than human biases, for which there are no debuggers. If military AI can reliably distinguish between friend and foe, and act in a manner that minimizes loss of life and collateral damage with more surgical precision than humans are capable of, then I think it would be more unethical not to seriously consider it.

Developing anti-drone technology might need to be fast-tracked. As this technology develops further, we could end up with the dystopian futures we see in movies.

It is astonishing that these days people can launch an attack without lifting a finger. Something similar happened in Iran, where a nuclear scientist was killed by an AI-assisted gun that used facial recognition to identify him; as soon as he drove past on the road, he was killed. Attacks like this can happen anywhere, anytime, so we need to be careful.

In recent years, AI has grown to play a central role in intelligent warfare, with the intent of targeting and destroying critical elements of opponents’ operational systems. In my opinion, AI is the third revolution in warfare, after gunpowder and nuclear weapons. Unlike previous, purely physical eras of war, this and subsequent generations will see the rise and increasing use of AI weapons. Although AI has the potential to transform technological capabilities and redefine the notion of war, its continued growth can be equally devastating for the world. AI weapons such as the drones described in this article will only become more intelligent, precise, faster and cheaper. Furthermore, they will learn more skills, and if they are weaponized by corrupt nations with malicious agendas, it is highly likely that AI-fuelled warfare will only be used to destroy the world. AI weaponry is plagued by a number of ethical and moral issues, which makes it difficult to accept and envision a world in which such weapons are the norm rather than the exception. Much like nuclear weapons, autonomous weapons are also considered an existential threat if left unchecked. Although AI could improve warfare as we understand it, it has equal potential to exacerbate global-scale conflicts. We must ask ourselves how much we trust humanity to use such technology for the right reasons.

The topic of artificial intelligence always gets me hooked. The importance of AI has increased significantly over the past few years. No matter how intriguing the technology is, there is always the risk of it being used as a threat to life. Overall, great post!

This is an excellent article! It’s terrifying to consider how strong a drone army may be. The mismatch in technology between the Ukrainian troops and the Soviet-era Russian military was plainly seen during the invasion of Ukraine. The existence of these kamikaze drones is really frightening. With each passing day, it looks as though we are edging closer to autonomous conflict, in which patriotism and willpower are no longer significant in the equation of battle. We might witness the actual potential of a contemporary military if NATO gives Ukraine additional high-tech equipment driven by AI (such as drones).

Best Information! What I learned from your post is that the goal of AI is to provide software that can reason on input and explain on output. AI will provide human-like interactions with software and offer decision support for specific tasks, but it’s not a replacement for humans – and won’t be anytime soon.

This was an interesting post to read! I’m not sure how to feel about drones running on AI. On one hand, I find the technology to be absolutely fascinating; teaching AI collision avoidance through deep machine learning is a really cool application that I’m excited to see develop. On the other hand though, it is quite terrifying knowing how this type of technology could be used in warfare – especially the idea of a “drone swarm attack” that you mentioned.

This was a really interesting post! With drones becoming more popular and accessible, knowing that these types of attacks are possible is very scary. Obtaining the facial recognition software could potentially allow anyone to make their own suicide drones. As AI technology improves, there will always be an impressive side to it as well as a frightening side. Hopefully, physical security professionals can find a way to best deter these types of attacks and protect those who may be targets.

There is no doubt that human beings are always good at applying the most advanced technologies to destroying themselves. The development of drone technology draws on advances in multiple industries. Hopefully, drones can be used in a more peaceful way instead of being turned into missiles.

Very good post, this was interesting to read about! I never would have predicted that drones would become so advanced, which is scary, as technology can always improve at any time. The more advanced technology gets, the more devastating the events it could cause in the wrong hands.

This post hints at an interesting question that I would like to know more about: what are the ethical issues with using AI to make life-or-death decisions? I think it hinges on a couple of things. First, how predictable or “rational” would the AI be in making its decisions? If it works very consistently, then it could be argued to reflect perfectly the considered judgment of the authority that uses it. It would be little different from employing a human being to do the same work (which would obviously be less efficient). Second, even if there is a high rate of error or noise in the AI’s activity, the same can be said of human decision makers. Perhaps if the AI fares no worse than a human being, then the spooky ethical problem with using AI to make these decisions goes away.

This is an ugly side of development in computer science, and it speaks to the real ethical quandaries of automation. When humans are removed from the equation entirely, context and empathy can be stripped out of any task. Others have pointed out the negative consequences of this AI failing, but I think it’s also important to consider the consequences of it working as intended: the drone will complete its task in a way that a human soldier may not. There are things in war that humans may not be willing to do, but as AI develops it may reach a point where this is no longer an obstacle.

That being said, there’s a lot about these drones specifically that was left unsaid. I would be curious to see whether any safeguards were built in during the design of this drone (you didn’t mention any, which makes me think that anybody it comes into contact with is at risk), and which priorities it is meant to have. Would a child throwing a rock at the drone cause it to attack? Does it preferentially seek out armed individuals over the unarmed? Is there any way that it can be manually controlled or recalled? I struggle to believe none of these measures are in place (it would be really scary if they weren’t), though it’s still a concerning step as far as future AI involvement in warfare goes.

Interesting blog! I previously watched the movie “Angel Has Fallen” and found its drone attack scene very interesting, but I did not know until now that this was an actual thing. Your blog explains the science behind an AI drone and I am honestly intrigued. This shows how modern algorithms like deep learning can be used to spread violence around the world. However, in my opinion these algorithms are not 100% perfect. They will always have bugs that might cause the drone to malfunction, resulting in an incorrect hit or a missed hit. An innocent life could be taken in the process. I think AI drones are dangerous and should not be out there in the first place.

Great post! I didn’t know that drones were so dangerous until I read this post. It’s pretty scary to know that drones can be equipped with dangerous weaponry that makes them deadly which only results in harm. Technology continues to evolve and it can be used incorrectly.

Very interesting post! It is very interesting to see where modern technology and AI are heading. I don’t know if it is fascinating or terrifying. Things are getting so easy nowadays that the drone does all the work without you making any effort. You have explained the science behind this drone, which is very intriguing, but it is genuinely terrifying how this type of technology could be used in modern warfare, especially the idea of a “drone swarm attack”.

Seeing how modern technological and computational discoveries are being used for such destructive purposes is terrifying. AI is incredible technology, but to use it in such a way is just horrible to think about. To be optimistic, though (if this could be considered optimistic), it is possible that the same technology could be used to prevent these AI drones from successfully executing their attacks.

Thanks for sharing such an informative blog post! With the constant growth of technology, it is scary how powerful and destructive it can be in the wrong hands. I was unaware that drones could be manipulated to cause such harm. The newest AI technologies can always be used in two ways: one to protect, and one to do damage. I think we need proper laws and policies in place so that the things mentioned in this post cannot be carried out.

When new technology comes along, it will always be used first by the military. The use of drones in warfare does seem to be highly effective, reducing the number of casualties among combatants. But as with biological or chemical weapons, is it ethical to use drones in warfare?

This technology is definitely one of the darker applications of artificial intelligence. It presents a very real moral dilemma that humanity faces as it moves forward technologically. As stated in the article, this drone is just the precursor to more advanced weaponry, and it shows what is achievable with today’s technology. It makes me fear for what war could look like decades from now. Very interesting read!

Super interesting post! There’s actually an interesting short film called “Slaughterbots” that covers essentially this exact topic: https://www.youtube.com/watch?v=9fa9lVwHHqg. Furthermore, the TV series “Black Mirror” also handled this topic, albeit in a bit of a tangential way.

There have been reports of US suicide drones taking out Russian tanks, and so the question that perhaps should be asked is whether the use of these drones is okay if limited only to hardware, or if we should open up their use against people as well.

Good post! It is very informative, concise, and rather scary. There are a lot of comments here already, so apologies if some of my observations have already been made. Some of the facts you’ve mentioned regarding the capabilities of this technology paint a terrifying picture, particularly the speed that these drones can achieve. Imagine going about your business when suddenly a swarm of drones flying at 130 km/h barrels toward you around a corner and explodes. It doesn’t leave much time to react, much less time to put up a fight. The speed and navigational abilities that these drones exhibit, combined with a potential suicide bomb functionality, seem to be an extremely powerful and frightening military development.

Wow, these drones are pretty scary. Artificial intelligence is useful; however, sometimes it can have dangerous applications like these. The suicide drone in particular sounds very dangerous. In my opinion, the powerful algorithms it is equipped with are more dangerous than the explosion itself.

A very informative post! Drones certainly pose significant threats during wars, as they can maneuver through enemy territory effectively without being noticed and can be used for a wide range of purposes, from arms delivery to gathering intel. As technology improves to deliver high accuracy and control, weaponizing drones can cause fatal destruction. Because technology can always be learned and networks can be hacked, there is even greater cause for concern, since a side's own weapons can be turned against it. Cyberwarfare has never been more prevalent than it is now, and digital threats are only going to increase.

130 km/h?! I’m conflicted. On one hand, it’s nice to see AI tech advancing as much as it has recently. But is it advancing for the right purposes? A lot of tech nowadays hardly seems worth the risk. Imagine one day you were just walking down the street and one line of data received by these AI drones ended your existence. Now that’s a scary thought. It is interesting, however, that the drones can build what is essentially a “virtual” layout of their surrounding areas, as well as their ability to automate flight for thirty minutes without any further assistance… In the future, there could be many good uses for these outside of war. These drones are basically real-life cheat codes.

Technological advancements can make human life better in so many aspects, but technology can also be an effective tool for harming people. Reading this post, I can’t help but be frightened about the role AI can play in modern warfare, and about what lessons national defences around the world will take from Russia's invasion of Ukraine.

This is an interesting subject! With the advancement of drone technology, an increasing number of individuals are purchasing drones. People in certain locations even fly their own drones around airports. This habit is exceedingly hazardous, yet there is no effective method to address it. Drones are a horrific war machine that can be made worse with a few tweaks.

This is horrifying. I wonder if the individuals who pioneered the technology that makes this possible ever considered the possibility that their work would lead to anything like this. Perhaps it is a natural consequence for any technological innovation that it will inevitably contribute to the advancement of warfare. This level of detachment from humanity – autonomous drones with the sole objective of maximizing devastation – makes me fear for the future.

As fascinating as the technology is, this is horrifying. I completely understand technological development, but what I don’t understand is advancement in areas such as these. I understand we don’t live in a utopia, but it's also not a complete dystopia where machines this murderous are required, I’d say. The level of power that this gives is insane. In my opinion, creating machines such as these should be a war crime altogether, as building them proves an intent to use them, which defeats any claimed need for them in the first place. It seems countries often forget the atrocities that took place in the previous world wars.

Interesting post. This drone innovation is novel. Drones can fly automatically and accurately identify air targets at high altitudes. Their ability to carry explosives undoubtedly makes them weapons of great lethality. This is the first time I learned that drones could also be used in war. AI-powered weapons like this make us aware of how far modern technology has progressed. I think relevant institutions should manage and control the use of drones. Drones would be better used in medical care and rescue than in war. Drone technology should be used to help people, which would show the true value of this technology.