Introduction

Reading news online has become increasingly common in Bangladesh, displacing the traditional habit of keeping up with current events through printed newspapers delivered to one’s doorstep every morning. As a result, the number of online news portals has grown significantly as publishers adopt the modern, profit-maximizing model whose potential quickly became apparent. However, even well-known newspaper companies in Bangladesh use misleading and fraudulent headlines to attract traffic to their websites. In 2015, millions of Bangladeshis shared a story from a popular online news portal announcing that Abdur Razzak, their favorite actor, had died. Only a small number of readers noticed that the actor had died in the latest film he was working on, not in real life [5]. The public panic lasted for weeks, yet the practice of publishing satire and fake news crafted to look genuine, in order to boost website traffic, has at times skyrocketed. Even though over 1,000 newspapers are published online in Bangladesh every day, the flood of false and satirical news has made finding real news challenging. Fake and satirical news portals are often found to have malicious intentions: increasing their number of visitors and stealing visitors’ personal data to sell to third-party organizations.

Over the years, Natural Language Processing (NLP), a branch of Artificial Intelligence, has played an increasingly important role in understanding written language and delivering useful, on-demand solutions to problems in many domains, particularly fake news detection. While machine learning approaches to detecting fake and satirical news in English have shown admirable results, there has been minimal success with Bangla news. A recent study proposed a deep neural network architecture called BNnet [5] to detect Bangla fake news; it demonstrated substantial performance and represents the kind of progress Bangla news detection needs. However, there is still room for improvement in detecting fake news with other deep learning architectures. Our contributions in this project are:

- Propose a novel methodology for Bangla fake news detection

- Demonstrate improved Bangla fake news detection performance through a comparative analysis of the different classifiers used

We used both embedding-based and transformer-based models to achieve our goal, with a strong emphasis on Bangla BERT [1]. We assessed the models with five evaluation metrics: Accuracy, Precision, Recall, F1 Score, and Area Under the ROC Curve (AUC).
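The five metrics above can be computed as in the following minimal, dependency-free sketch. It assumes binary labels with 1 = fake/satirical and 0 = authentic, and a 0.5 decision threshold on the model's predicted probability; neither convention is stated in the post. In practice, `sklearn.metrics` provides `accuracy_score`, `precision_score`, `recall_score`, `f1_score` and `roc_auc_score` for the same quantities.

```python
# Dependency-free versions of the five evaluation metrics.
# Assumption: label 1 = fake/satirical (positive class), 0 = authentic.


def confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, tn, fn


def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outscores a random negative
    (ties count as half), i.e. the normalised Mann-Whitney U statistic."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def evaluate(y_true, scores, threshold=0.5):
    """Compute Accuracy, Precision, Recall, F1 and AUC from predicted fake-news probabilities."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "auc": roc_auc(y_true, scores),  # uses raw scores, not thresholded predictions
    }


if __name__ == "__main__":
    # Toy labels and scores, purely illustrative (not the project's data).
    print(evaluate([1, 0, 1, 1, 0, 0], [0.9, 0.2, 0.4, 0.8, 0.6, 0.1]))
```

Because AUC is computed from the ranking of the raw scores rather than from thresholded predictions, it can be noticeably higher than accuracy for the same model.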

Dataset we used

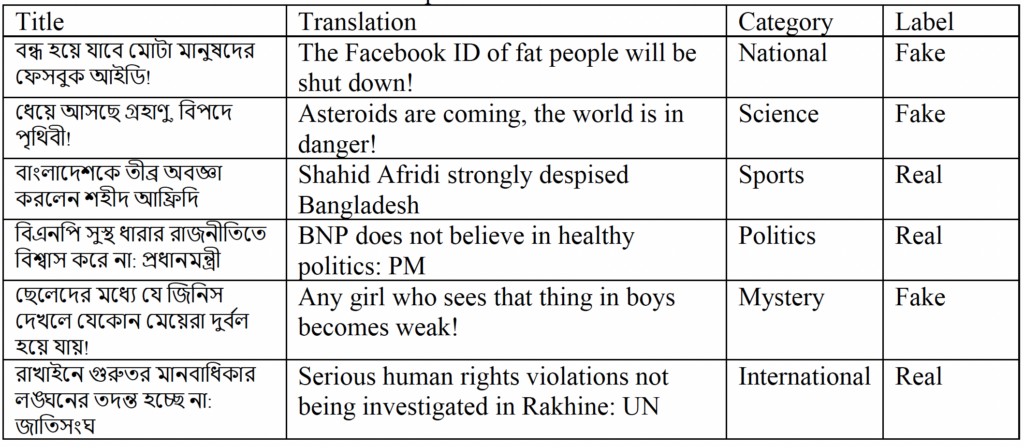

Data was gathered from numerous Bangla news portals via web scraping. We started with a list of 43 Bangla news portal websites: 25 legitimate, reputable, and verified news portals and 18 satirical and fake news portals. Figure 1 shows a set of sample Bangla news articles.
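A minimal sketch of how scraped pages could be turned into labelled examples follows, using only the Python standard library (`html.parser`, `urllib.parse`). It assumes, as the portal split above suggests but the post does not state explicitly, that each article inherits the label of the portal it came from. The domain names are hypothetical placeholders, and extracting text from `<p>` tags is an assumption about the portals' markup.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical placeholder domains; the real lists (25 legitimate,
# 18 satirical/fake portals) are not named in the post.
LEGIT_PORTALS = {"legit-news.example", "daily-verified.example"}
FAKE_PORTALS = {"satire-bd.example", "fake-portal.example"}


class ParagraphExtractor(HTMLParser):
    """Collects the text inside <p> tags, assuming article bodies use paragraph markup."""

    def __init__(self):
        super().__init__()
        self._in_p = False
        self.paragraphs = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self._in_p = True
            self.paragraphs.append("")

    def handle_endtag(self, tag):
        if tag == "p":
            self._in_p = False

    def handle_data(self, data):
        if self._in_p:
            self.paragraphs[-1] += data


def extract_article_text(html):
    """Return the page's paragraph text joined by single spaces."""
    parser = ParagraphExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(p.strip() for p in parser.paragraphs if p.strip())


def portal_label(url):
    """0 = legitimate portal, 1 = satirical/fake portal, None = portal in neither list."""
    host = urlparse(url).hostname or ""
    if host.startswith("www."):
        host = host[len("www."):]
    if host in LEGIT_PORTALS:
        return 0
    if host in FAKE_PORTALS:
        return 1
    return None


def build_dataset(pages):
    """Turn (url, html) pairs into labelled records; each article inherits its portal's label."""
    records = []
    for url, html in pages:
        label = portal_label(url)
        text = extract_article_text(html)
        if label is not None and text:
            records.append({"url": url, "text": text, "label": label})
    return records
```

Portal-level labelling is convenient, but it lets a model pick up portal-specific cues (an outlet's name, recurring footers) instead of the article's content, so such cues are worth stripping from the text before training.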

Experimentation

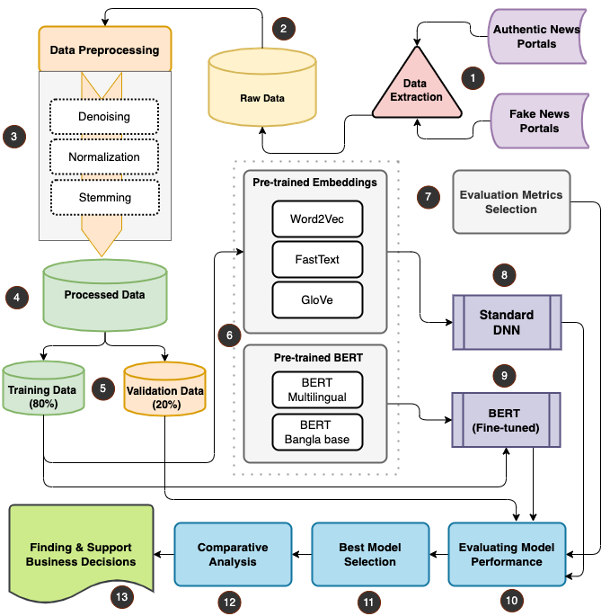

Our experiment is split into two parts: one uses embedding-based models such as word2vec [8], fastText [7], and GloVe [6], and the other uses BERT [9], a transformer-based model. Each part consists of two sub-steps, both of which are required to complete the experiment. Figure 2 shows the proposed methodology of this experiment.
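For the embedding-based part, one common way to use pre-trained word vectors such as word2vec, fastText, or GloVe is to average the vectors of a document's tokens into a single feature vector and train a classifier on it. The sketch below assumes that approach, with tokens already preprocessed and out-of-vocabulary tokens skipped. The post does not say how embeddings were pooled or which classifier was used on top, so the nearest-centroid classifier here is a deliberately simple, hypothetical stand-in.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def mean_embedding(tokens: Sequence[str], vectors: Dict[str, Vector], dim: int) -> Vector:
    """Average the pre-trained vectors of in-vocabulary tokens; all zeros if none are known."""
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        return [0.0] * dim
    return [sum(v[i] for v in known) / len(known) for i in range(dim)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity, defined as 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class NearestCentroid:
    """Hypothetical stand-in classifier: label a document by its most similar class centroid."""

    def fit(self, X: List[Vector], y: List[int]) -> "NearestCentroid":
        self.centroids = {}
        for label in sorted(set(y)):
            rows = [x for x, lab in zip(X, y) if lab == label]
            # Column-wise mean of this class's document vectors.
            self.centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]
        return self

    def predict(self, X: List[Vector]) -> List[int]:
        return [max(self.centroids, key=lambda lab: cosine(x, self.centroids[lab])) for x in X]
```

With real embeddings, `vectors` would be loaded from a pre-trained model file and `dim` set to its dimensionality. fastText can also build vectors for unseen words from character n-grams, which this sketch does not attempt.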

First, we collected and extracted data from various authentic and fake news portals in Bangladesh. Next, we preprocessed the data using denoising, normalization, and stemming so that it could be used to train the machine learning models. Using an 80:20 train-test split, we then applied both pre-trained embedding models and pre-trained BERT models to detect Bangla fake news. Finally, we analyzed the performance of the implemented system.
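The preprocessing and splitting steps above could look roughly like the following. This is a sketch under stated assumptions, not the project's code: "denoising" is taken to mean removing HTML tags, URLs, and non-Bangla characters; "normalization" means Unicode NFC plus whitespace collapsing; the stemmer is a naive, hypothetical suffix stripper over a handful of common Bangla inflectional endings; and the 80:20 split is a seeded random shuffle.

```python
import random
import re
import unicodedata

TAG_RE = re.compile(r"<[^>]+>")
URL_RE = re.compile(r"https?://\S+|www\.\S+")
# Anything outside the Bengali Unicode block (U+0980-U+09FF) and whitespace is
# treated as noise; note this also drops the danda (U+0964) and ZWJ/ZWNJ.
NON_BANGLA_RE = re.compile(r"[^\u0980-\u09FF\s]")
SPACE_RE = re.compile(r"\s+")

# Hypothetical suffix list for a naive stemmer (plural, case and classifier
# endings), longest first, NFC-normalised so it matches normalised text.
SUFFIXES = [unicodedata.normalize("NFC", s) for s in ("গুলো", "গুলি", "দের", "টি", "টা", "কে", "রা")]


def denoise(text: str) -> str:
    """Strip HTML tags first (so URLs inside attributes go too), then URLs and non-Bangla characters."""
    text = TAG_RE.sub(" ", text)
    text = URL_RE.sub(" ", text)
    return NON_BANGLA_RE.sub(" ", text)


def normalize(text: str) -> str:
    """Apply Unicode NFC normalisation and collapse runs of whitespace."""
    text = unicodedata.normalize("NFC", text)
    return SPACE_RE.sub(" ", text).strip()


def stem(token: str, min_stem: int = 2) -> str:
    """Strip the first matching suffix, keeping at least `min_stem` characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= min_stem:
            return token[: -len(suffix)]
    return token


def preprocess(text: str) -> list:
    """Denoise, normalise, split on whitespace, then stem each token."""
    return [stem(tok) for tok in normalize(denoise(text)).split()]


def train_test_split(items, test_ratio: float = 0.2, seed: int = 42):
    """Shuffle with a fixed seed and split (80:20 by default)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * (1 - test_ratio))
    return shuffled[:cut], shuffled[cut:]
```

A naive suffix stripper will over-stem some words (for example, a word that merely happens to end in রা), so a dedicated Bangla stemmer would be more reliable. If the fake and authentic classes are imbalanced, a stratified split that keeps their ratio equal in both parts may be preferable.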

Results

Our results demonstrate that transformer-based (BERT) models perform better than embedding-based algorithms at detecting Bangla fake news. Comparing multilingual BERT with Bangla BERT, we found that Bangla BERT performs better: the multilingual BERT model achieves an accuracy of 87% for Bangla fake news detection, whereas the Bangla BERT model achieves an accuracy of 91% and an AUC of 98%. Overall, based on all the analyses, evaluation metrics, and performance results, the newly proposed BNnetXtreme demonstrated significantly improved performance in detecting Bangla fake news, with the Bangla BERT base classifier performing best.

Conclusion

The citizens of Bangladesh encounter fake and misleading news every day as they browse the internet. Recently, numerous incidents have made people victims of identity theft, caused them to lose money by investing in businesses with fake endorsements, and drawn them into communal violence. Fake news in the Bangladeshi context therefore deserves advanced research and investigation, both to give users a safe space on online news portals that might be misleading, fake, or malicious, and to help Bangladesh’s policymakers control and monitor the online space so as to mitigate the public risk and influence of fake and misleading news. In this project, we have studied and experimented with a proposed methodology for detecting Bangla fake news, in order to contribute to the community that fights fake news every day.

References

[1] Sarker, S. (2020). BanglaBERT: Bengali Mask Language Model for Bengali Language Understanding. GitHub repository. https://github.com/sagorbrur/bangla-bert (accessed February 10, 2021).

[2] Hossain, E., Kaysar, N., Joy, J. U., Md, A. Z., Rahman, M., & Rahman, W. (2022). A Study Towards Bangla Fake News Detection Using Machine Learning and Deep Learning. In Sentimental Analysis and Deep Learning (pp. 79-95). Springer, Singapore.

[3] George, M. Z. H., Hossain, N., Bhuiyan, M. R., Masum, A. K. M., & Abujar, S. (2021, July). Bangla Fake News Detection Based On Multichannel Combined CNN-LSTM. In 2021 12th International Conference on Computing Communication and Networking Technologies (ICCCNT) (pp. 1-5). IEEE.

[4] Adib, Q. A. R., Mehedi, M. H. K., Sakib, M. S., Patwary, K. K., Hossain, M. S., & Rasel, A. A. (2021, October). A Deep Hybrid Learning Approach to Detect Bangla Fake News. In 2021 5th International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT) (pp. 442-447). IEEE.

[5] Al Imran, A., Wahid, Z., & Ahmed, T. (2020, December). BNnet: A Deep Neural Network for the Identification of Satire and Fake Bangla News. In International Conference on Computational Data and Social Networks (pp. 464-475). Springer, Cham.

[6] Pennington, J., Socher, R., & Manning, C. D. (2014, October). GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP) (pp. 1532-1543).

[7] Joulin, A., Grave, E., Bojanowski, P., Douze, M., Jégou, H., & Mikolov, T. (2016). FastText.zip: Compressing text classification models. arXiv preprint arXiv:1612.03651.

[8] Church, K. W. (2017). Word2Vec. Natural Language Engineering, 23(1), 155-162.

[9] Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2018). BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805.

When programming AI or a Machine Learning algorithm, it’s extremely important to make sure that the program is detecting and identifying the information you expect it to (and I feel that this is especially important when it comes to AI applied in a sense such as this). It’s not uncommon for an algorithm to be given a dataset and learn to properly identify and make distinctions between properties of the data that were not intended to be taken into account. One example of this could be a program that’s made in an attempt to detect a disease (e.g. cancer), but given test samples and data sets, learns to identify children from the data set as opposed to those actually suffering from the disease. If an AI is being used to detect misinformation, it HAS to be extremely accurate.

I’m glad that there are new ways to combat fake news. IIRC, there was a bot in Ukraine that was created to detect fake news, as there is a lot of disinformation in Ukraine as a result of the conflict, with Russian bot farms trying to control the narrative. I liked how you included a graphic of how the ML works. I wonder how this bot would fare in a Western country, however, and whether it would detect bias, since the sources you indicated are at the two ends of the spectrum: legitimate news and fake news. Would it still perform well if the news is biased and legitimate but contains misleading information?

I think you brought up a very interesting point: the fine line between what is real and what is fake. I would recommend reading Ryan Holiday’s book “Trust Me, I’m Lying”. The book is about individuals who feed fake news to small media outlets, which ends up being picked up by big media such as CNN or BBC before making international headlines. I would love to know the inner workings of the machine learning software so I can better understand how it sorts good news from bad. Since we don’t really understand how machine learning works, we can never be sure of the output generated by the software.

I had the same thought! Machine learning algorithms can only expand the way they were designed to, while humans are adaptable in almost every way. I have always seen AI as something that is easy to exploit by testing every front and seeing where the lines are drawn. If these fake news companies get too smart, it may become very difficult not just for the AI but even for human moderators to tell fake news from real news. But that raises the question: if AI isn’t the answer to stopping fake news, then what could do a better job?

Great post! It is interesting to see how other countries have adapted to online news sources rather than traditional news sources. As you mentioned, this unfortunately allows companies to mislead audiences and to profit off the information they gather from visitors to these websites. I found your model for testing the different portals really interesting; it was a cool self-directed experiment that you added on top of what you found online.

It is truly unfortunate to see the greed of these corporations prey on the emotions of the public to garner profits. It is one thing to clickbait, but to blatantly lie and create fake news is a new low. Especially with a lack of other reliable sources, it’s hard to verify whether what you’re reading is even factual. I found the use of machine learning to determine the validity of these articles interesting. I wonder how the AI will tell the difference between satire/clickbait and fake, deceitful news.

Fake news can not only mislead the audience but also lead to unnecessary panic and serious consequences. This reminds me of an AI used by many on Line, an online messaging app widely used in Asia. The AI appears in group chats as a user and provides corrections and additional information when it detects that links to fake news are being shared in the chat.

This is an interesting study! I wonder how the accuracy can be improved for AI to differentiate fake news from real ones.

Great post! Fake news and misinformation spread online are a massive, worldwide problem. While some cases are simply annoying and superficial, many incidents of fake and misleading news can have serious, damaging consequences. I am glad that more governments and organizations are deploying AI and similar technology to identify and limit the spread of fake news. The experiment comparing models was really interesting, and you did a really great job of explaining the process and results.

Amazing post! I think this type of software is super important because, although we have the world’s knowledge at our fingertips, we also have a lot of lies and propaganda. It’s amazing that this type of AI is being put into place, especially in third world countries that have been subjected to a lot of corruption from within and a lot of bias. That is the only downside I see; other than that, as long as this filtering software is in the right hands, it’ll only do good.

Hey, great post. I like how governments and people are fighting against misinformation. However, I would never have thought that machine learning would take a leading role in ensuring that fake news does not unleash its destructiveness on others. I have heard about the hold that WhatsApp has over people, especially in countries like India and Pakistan. The app was solely responsible for communal violence and national attacks. Nonetheless, the very fact that a tool is being developed to combat this points to a future very different from the one that would exist with no oversight of misinformation.

Amazing post bro! Fake news and propaganda have caused plenty of problems and misunderstandings in the international community. Just like human interaction, news dissemination and reporting should also be based on sincerity. Maliciously steering public opinion will have a great negative impact on individuals and even the country. Advanced technology like machine learning should be applied more to these types of problems.

This is a very interesting post. Fake news has been a real problem for a long time. It leads people’s opinions down the wrong paths. AI and technology in general have advanced so much, but they are only useful when people use them for the right purposes.

I liked this post a lot since it went a step beyond what was originally asked of us for blog posts and conducted an analysis on the available data. Your findings are extremely interesting. While I’m not very familiar with natural-language processing, I’ve heard about transformer-based NLP models before and I’m curious whether you predict they would retain their advantage in detecting fake news in other languages as well. I’m also curious about how your models were fed data from these news articles. For example, had your models included the names of the newspapers among the words scraped from their articles, a BERT-based model would have been susceptible to filling in the blanks around the title of the newspaper and determining that it was fake news that way (i.e., by knowing that it came from a known fake news vendor), rather than by analyzing the text of a given article.

It’s quite remarkable the way that society evolves according to new technology. Fake news has been around since the beginning of time, and yet with more resources than ever to fact-check at our fingertips, it’s still so easy to get lost. It’s especially difficult when corporations and governments use this to profit, whether politically or financially.

Very cool post! I find it hilarious that people were so interested in fake news that viewership of many media outlets hit highs never seen before, and I’m not surprised that companies saw this and realized they could increase profits by continuing to post fake news. It is rather unfortunate that this is the way things are, but the AI designed to find fake news is really interesting. I think it could be really useful all over the world for all sorts of media. Great post!

Misinformation and the fight against it is something I have recently been looking into, specifically on major news outlets such as Fox, CNN, and CBC. One thing critics point out about these outlets is that their rendition of a scene or scenario will be riddled with half-truths. Half-truths are a form of lying and manipulation in which you gain trust or legitimacy by first stating a truth that leads to a lie or speculation that isn’t presented as such. It will be interesting to see how the Bangla team will address this and train the algorithm to detect it.

It’s impressive how creative and clickbaity some news articles are becoming, often with a title that is somehow a half-truth with tons of context left out. Incidents such as identity theft and investing in fake businesses, however, are much more serious than just falling for a clickbait title. The idea of using AI to detect fake news is very intriguing, though I can already see concerns for when it’s not 100% accurate, since these days telling what’s real from what’s not can be difficult.

Great post! I’m glad to see there are more developments and new methods to combat misinformation. At the same time, it’s unfortunate some are intentionally spreading misinformation to spread their own personal agenda or gain some profits or clicks. Using AI to detect misinformation is an interesting and a possibly promising concept!

A really interesting post. I’m curious, as I think a couple of posters above have already mentioned, how are/should these sorts of algorithms be trained to deal with the idea of misleading vs true information, and how can the creation of the algorithm itself be insulated against the issues of bias? After all, information can be both true, but also misleading depending on the context, and I wonder if fake-news algorithms might begin to struggle and see dips in their accuracy as it becomes more and more difficult for a determination to be conclusively made. Or is there an objective standard that can be used to verify that information is true and not fake so that as the determination gets more difficult, there is something that can be turned to to review the accuracy of the algorithm?

All in all, though, this is a super cool project.

This is the first time I’ve heard of a detector for fake news websites, and it seems like a good tool for Bangla news. To obtain traffic and benefit from it, companies publish false and misleading news. This not only brings panic to society but also involves maliciously selling users’ information. This behaviour makes it impossible to truly tell whether news is authentic, and it also infringes on users’ privacy. The performance analysis of the model is interesting, though. The five evaluation metrics show that the BNnetXtreme detector performs well. I hope Bangladesh can make sensible use of this detector to filter misleading news and protect users’ personal information.

Great post! It’s great to know that there’s a detector that can detect fake news. Misinformation can cause significant, immeasurable damage in the form of civil unrest and damage to the reputation of these news sources. However, I do think a line should be drawn between “satirical” and “fake news”, especially since the intent of something “satirical” may be completely different from that of “fake news”. This detector could instead be used by dictatorial regimes to suppress the rights of individuals.

Interesting post. It’s very cool that there is a detector that can detect fake news. I think this is a very helpful thing to have because many people can fall prey to fake news fairly easily. Having this detector will definitely help a lot of people out.

Fake news and those clickbait titles with never-ending paragraphs that don’t provide you with any facts: I hope that someday we can all be free of those senseless wastes of ink. You’ve put the success rate at 91%; how would the false positives fare in comparison with this number? What would be the process to increase the success rate? Is it simply a matter of increasing the data supplied for training?