“OpenAI says it has disrupted 20-plus foreign influence networks in past year”[4]

It was only a matter of time before analysts and researchers found AI-generated malware in the wild. Last month, a report published by HP Wolf Security[5] highlighted that malicious code written by generative AI had been detected in recent targeted email campaigns against French users, where it was used to deliver AsyncRAT. Many cybercriminals already use AI to generate realistic phishing emails, and governments and researchers have long warned that AI tools could enable sophisticated attacks even with the highest level of protection and policies implemented by vendors.

What is AsyncRAT?

Released in 2019, AsyncRAT is a Remote Access Trojan (RAT) that its creators describe on their official GitHub page as an open-source remote administration tool[2]. In practice, AsyncRAT is used almost exclusively by cybercriminals as a medium for loading malware, executing code remotely, stealing credentials, logging keystrokes, covertly recording the screen, and so on.[2] The product has botnet capabilities, with a remotely located command-and-control (C2) server from which affected devices can be managed.

It has become a very powerful tool for cybercriminals to carry out their intents. Since its release it has only grown in popularity, and threat actors have leveraged it in attacks against a multitude of entities such as airports, hospitals, and government offices.

Findings of HP Wolf Security

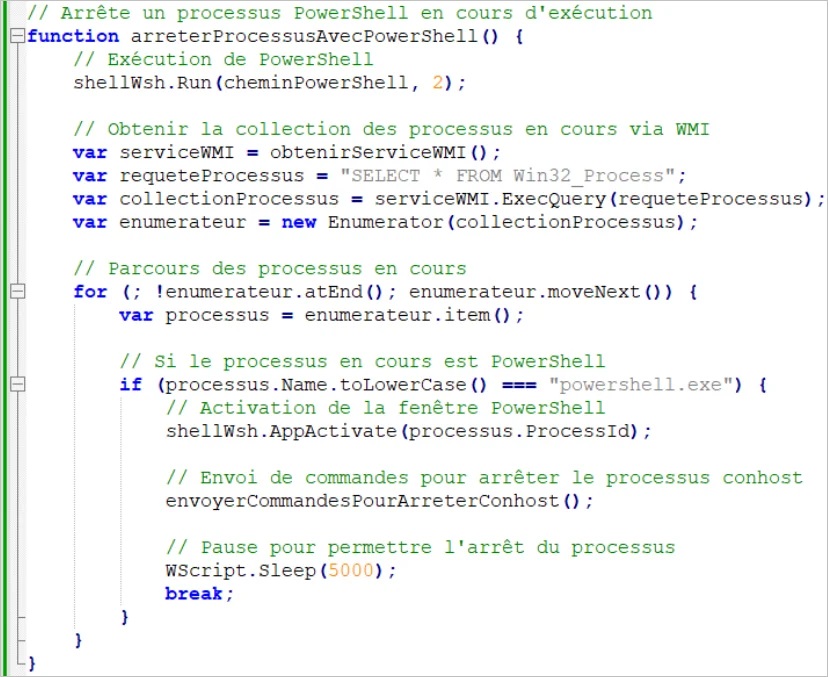

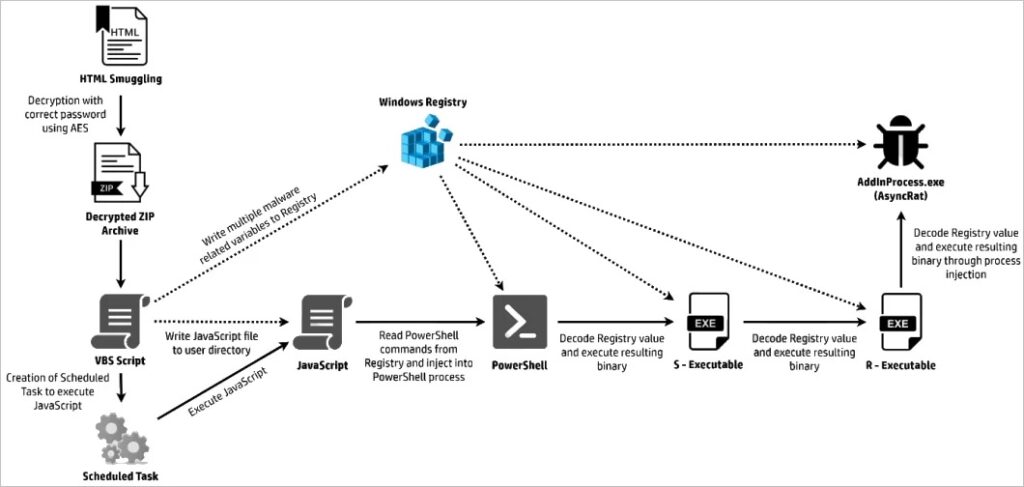

Researchers at HP Wolf Security[5] intercepted campaign emails sent out in early June that carried malware in the form of an encrypted ZIP attachment. After brute-forcing the encrypted file, they discovered VBScript and JavaScript code written by AI. The script comments, the style of writing, and the choice of native-language function and variable names led the researchers to conclude that the code was AI-generated.

Image 1: The VBScript Code
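One of the cues mentioned above, unusually thorough commenting, can be approximated with a simple comment-density measure. The sketch below is only an illustration and is not the method HP Wolf Security used; the function names, the sample script, and the 0.4 threshold are all assumptions chosen for the example. A high ratio is at most a weak hint, since plenty of human-written code is well commented too.

```python
# Whole-line comment markers for VBScript (' and REM) and JavaScript (//).
COMMENT_PREFIXES = ("'", "REM ", "//")


def comment_ratio(source: str) -> float:
    """Return the fraction of non-blank lines that are whole-line comments."""
    lines = [line.strip() for line in source.splitlines() if line.strip()]
    if not lines:
        return 0.0
    # upper() makes the REM check case-insensitive; ' and // are unaffected.
    commented = sum(line.upper().startswith(COMMENT_PREFIXES) for line in lines)
    return commented / len(lines)


def looks_heavily_commented(source: str, threshold: float = 0.4) -> bool:
    """Flag scripts whose comment ratio meets a hypothetical threshold."""
    return comment_ratio(source) >= threshold


# Harmless sample written for this example: every statement has its own comment.
sample = """' Declare the greeting
Dim greeting
' Assign the greeting
greeting = "hello"
' Show the greeting
WScript.Echo greeting
"""

print(comment_ratio(sample), looks_heavily_commented(sample))
```

In practice a signal like this would only be useful alongside other indicators, such as the naming style and code structure the researchers also looked at.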

The script then downloads and executes AsyncRAT, which sets up an encrypted remote connection to the controller and allows the remote controller to log keystrokes[1]. Once set up, AsyncRAT can also deliver many other payloads remotely.

Image 2: Complete Infection Chain

OpenAI findings [4]

After the HP Wolf Security findings and an April 2024 report by Proofpoint on a PowerShell loader, suspected of being written by AI, that was used by the threat actor TA547 (aka “Scully Spider”), it was apparent that there has been a significant rise in cybercriminals’ use of generative AI. OpenAI subsequently reported that it has “disrupted over 20 malicious cyber operations trying to abuse ChatGPT”. The data released in the report covers 2024 only. The report highlights abuse of ChatGPT by threat actors in Iran and China, who used it to enhance their operating capabilities.

Role of AI in cybercrime

More and more non-technical malicious actors are finding it easier to use AI-generated code to design malware and perform attacks. AI is lowering the bar for entering the threat space and for implementing a large-scale attack with limited knowledge.[1] It’s apparent that more and more threat actors are entering the business, lured by quick, massive money despite their limited knowledge and understanding of the capabilities. AI is empowering such entities and groups to thrive and achieve some success.

Researchers are now wondering what effect AI may have on highly specialized cybercriminals, who would be able to create more dangerous applications and more sophisticated attacks using AI tools. We are only seeing the first drops of a massive storm that is about to make landfall as AI matures. At the pace AI has evolved in the last few years, it’s a matter of time until we see a multifold increase in cybercriminal activity.

Artificial Intelligence application in threat prevention

I understand this is a massive topic with a wide scope. We have only scratched the surface of what AI can do, so we are still unaware of its true potential. It’s interesting to see this technology evolve rapidly as more and more businesses adopt it, and it’s mind-boggling to see how far AI has risen in such a short period of time. While we are witnessing to some extent how AI can disrupt when abused by bad actors, organizations are also harnessing its potential to protect and secure their perimeter.

AI integration in penetration testing has been evolving rapidly.[3] Penetration testing can be tedious and time-consuming, and it takes significant human resources and knowledge to perform. Hopefully AI-assisted testing will allow organizations to be better prepared against adversaries and impending attacks.

As more and more cybersecurity tools are powered by AI, more efficient threat modeling can be achieved, and cybersecurity professionals will be empowered to detect and mitigate risks faster. At the end of the day, it’s important to keep our security tools updated, never download attachments from unknown sources, and be diligent in double-checking attachments from known sources, because it could be a matter of one wrong click.
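As a rough illustration of what double-checking an attachment could look like in an automated form, the sketch below inspects a ZIP file for two things that appeared in the campaign described earlier: encrypted entries (which scanners cannot open) and script files. It uses only Python's standard `zipfile` module; the function names and the list of extensions are assumptions made for this example, not a complete or recommended policy.

```python
import io
import zipfile

# Hypothetical list of script extensions worth a second look in attachments.
SCRIPT_EXTENSIONS = (".vbs", ".js", ".wsf", ".ps1", ".hta")


def is_encrypted(info: zipfile.ZipInfo) -> bool:
    """Bit 0 of a ZIP entry's general-purpose flags marks it as encrypted."""
    return bool(info.flag_bits & 0x1)


def triage_zip(data: bytes) -> list:
    """Return human-readable warnings for a ZIP attachment given as bytes."""
    warnings = []
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        for info in archive.infolist():
            if is_encrypted(info):
                warnings.append(f"{info.filename}: encrypted entry")
            if info.filename.lower().endswith(SCRIPT_EXTENSIONS):
                warnings.append(f"{info.filename}: script file")
    return warnings


# Build a small harmless archive in memory to try the check.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("invoice.vbs", "' placeholder")
    archive.writestr("notes.txt", "hello")

print(triage_zip(buffer.getvalue()))
```

A check like this does not replace an email security product or user caution; it only shows that some warning signs can be surfaced before anyone clicks.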

References:

1. Author: Bill Toulas. Link: https://www.bleepingcomputer.com/news/security/hackers-deploy-ai-written-malware-in-targeted-attacks/?&web_view=true

2. Author: N/A. Link: https://www.blackberry.com/us/en/solutions/endpoint-security/ransomware-protection/asyncrat#how-to-prevent

3. Author: Jason Firch. Link: https://purplesec.us/learn/ai-penetration-testing/

4. Author: Bill Toulas. Link: https://www.bleepingcomputer.com/news/security/openai-confirms-threat-actors-use-chatgpt-to-write-malware/

5. Author: HP Wolf Security Report. Link: https://www.hp.com/us-en/newsroom/press-releases/2024/ai-generate-malware.html

Image 1. Link: https://www.bleepstatic.com/images/news/u/1220909/2024/AI/04/comments.jpg

Image 2. Link: https://www.bleepstatic.com/images/news/u/1220909/2024/AI/04/diagram.jpg

Fantastic job, Kaushik! Your study shows how cybersecurity risks are changing, which is quite interesting. The detection of AI-generated malicious code in targeted email campaigns marks a significant milestone in the cybersecurity world. It is evidence that threat actors are aggressively using AI techniques to produce malware that is more complex and challenging to detect. Although the use of AI to create realistic phishing emails was already suspected, the development of AI-written malware code raises the stakes to a new level.

This is a very alarming trend: using AI for any kind of attack makes it easier for even non-technical individuals to carry out sophisticated cyberattacks. This shift marks a significant evolution in the threat landscape. As technology advances, we can expect even more complex and large-scale attacks, which highlights how important it is for organizations to invest in every security measure and user education possible. Navigating this dynamic landscape will require a proactive approach that balances technological innovation with ongoing training to effectively mitigate emerging risks.

Nice job, Kaushik! Cybercriminals use generative AI to create malware that delivers tools like AsyncRAT, making attacks more accessible and sophisticated. AI automates phishing and malware creation, increasing cybercrime risks.

Relevant post, Kaushik! It raises an important point about the double-edged sword of automation and new inventions. While AI can be used to create tools that limit the generation of malicious code, strong cybersecurity teams are still crucial, especially for sensitive companies. This highlights the need for standards and regulations governing the use of generative AI in the digital age. I think it’s high time for us to enhance our knowledge of generative AI along with MISP.

Nice work Kaushik! The rapid evolution of AI in cybersecurity is both exciting and critical for organizations. As AI enhances threat modeling and detection capabilities, organizations can proactively identify vulnerabilities and respond to threats faster than ever before. However, it’s essential to consider the implications of emerging technologies like quantum computing! Your point about maintaining vigilance is crucial too. Human error remains a significant factor in cybersecurity breaches, so fostering a culture of security awareness among all employees is essential.

Thanks for the insightful post! It is no news that the use of AI has been on the rise, and knowing that it is being used to deliver malware is quite eye-opening. The targets mentioned in the post are definitely high risk considering they house an enormous amount of users’ Personally Identifiable Information (PII). Although AI is extremely useful in cyber defence, it is unfortunate that bad actors can leverage it in their malicious activities. I believe this should be a wake-up call for organizations to enhance their AI risk and governance practices. Thanks for the insight!

Great post, Kaushik! This is another scary development in terms of the uses of AI. Having AI that is capable of writing code is definitely worrisome for software developers’ job security, but knowing it can be used to create effective attacks is worrisome for everyone. I hope that AI could also be used to mitigate this malware problem. Perhaps it could be used to scan code bases and look for potential vulnerabilities, as well as suggest code that is as secure as possible. It could also help improve spam filtering and the detection of malicious attachments, as current implementations in most email systems often let many malicious emails through.

It’s wild to see how easily non-tech folks can use AI to create malware and launch attacks. As AI gets smarter, we’re likely in for a storm of new threats. Organizations need to step up their defenses and stay aware of these risks. Let’s hope we can turn the tide and use AI to boost security instead!

Great post! The way cybercriminals are using AI to design malware and phishing campaigns clearly demonstrates that technology can be a double-edged sword. Although AI can be an amazing help in fighting cybercrime, it can also be used by bad guys to commit crimes. There are many scary things about AI, and even more about AI-generated code, which allows non-technical people to be effective in cybercrime.

This is very enlightening, Kaushik. These AI systems are built to be sophisticated enough to handle every manner of manipulation or computation presented to them; in return, they’re now weaponized by bad actors and used to create complex malware and viruses with very little effort from cybercriminals. As much as AI is intended to make life and work easier, this is another aspect of using AI that needs serious attention. Various topics and debates have questioned how disadvantageous AI is likely to be as opposed to its intended purpose. AI is slowly killing productivity and creativity in humans. We have become so reliant on AI that some conspiracy theories hold we’re headed towards doom. My question is: how do we reduce our reliance on AI and keep it under control?

Interesting reading, Kaushik. I would also emphasize using “code-signing” [1] to limit script execution to authentic signed applications (Anderson, 2018). With code-signing, the organization can limit the execution of this malicious code by ensuring that only code from well-known and trusted publishers can be executed, rather than relying on end-user maturity to reject trojans and ransomware web links. The challenge with code-signing, though, is that reviewing and digitally signing thousands of programs and scripts is a cumbersome and lengthy process. Nevertheless, code-signing is a safety net against well-written AI-generated code, which, as you explained, is just the first sign of a massive storm.

[1] Anderson, M. E. (2018). Certificates, code signing and digital signatures. 10643, 1064307-1064307–6. https://doi.org/10.1117/12.2302618