Imagine you’re sending a secret message, and suddenly someone decides to check what you’re writing. Strange, right? That’s essentially what’s unfolding in Europe right now. The EU is debating a controversial plan, known as the “Chat Control” law, which would require messaging apps, including privacy-focused services like WhatsApp, Signal, and iMessage, to scan every user’s chats for harmful content (TechRadar, 2025). This could turn your phone into a surveillance tool, even when you believe your conversation is private.

Figure 1: Symbolizing surveillance within private chats. (Generated by Copilot)

At first glance, the goal sounds noble: stop the spread of child sexual abuse material (CSAM). But here’s the issue: the approach could weaken one of the strongest privacy protections we have, end-to-end encryption. And that’s where the debate heats up.

The EU wants to protect children online. Who could argue against that? The internet is a chaotic, often unpredictable space, and predators do exploit platforms. Governments have a duty to safeguard vulnerable users, and the urge to act is understandable. If all you consider is the goal—protecting children—the proposal looks like common sense.

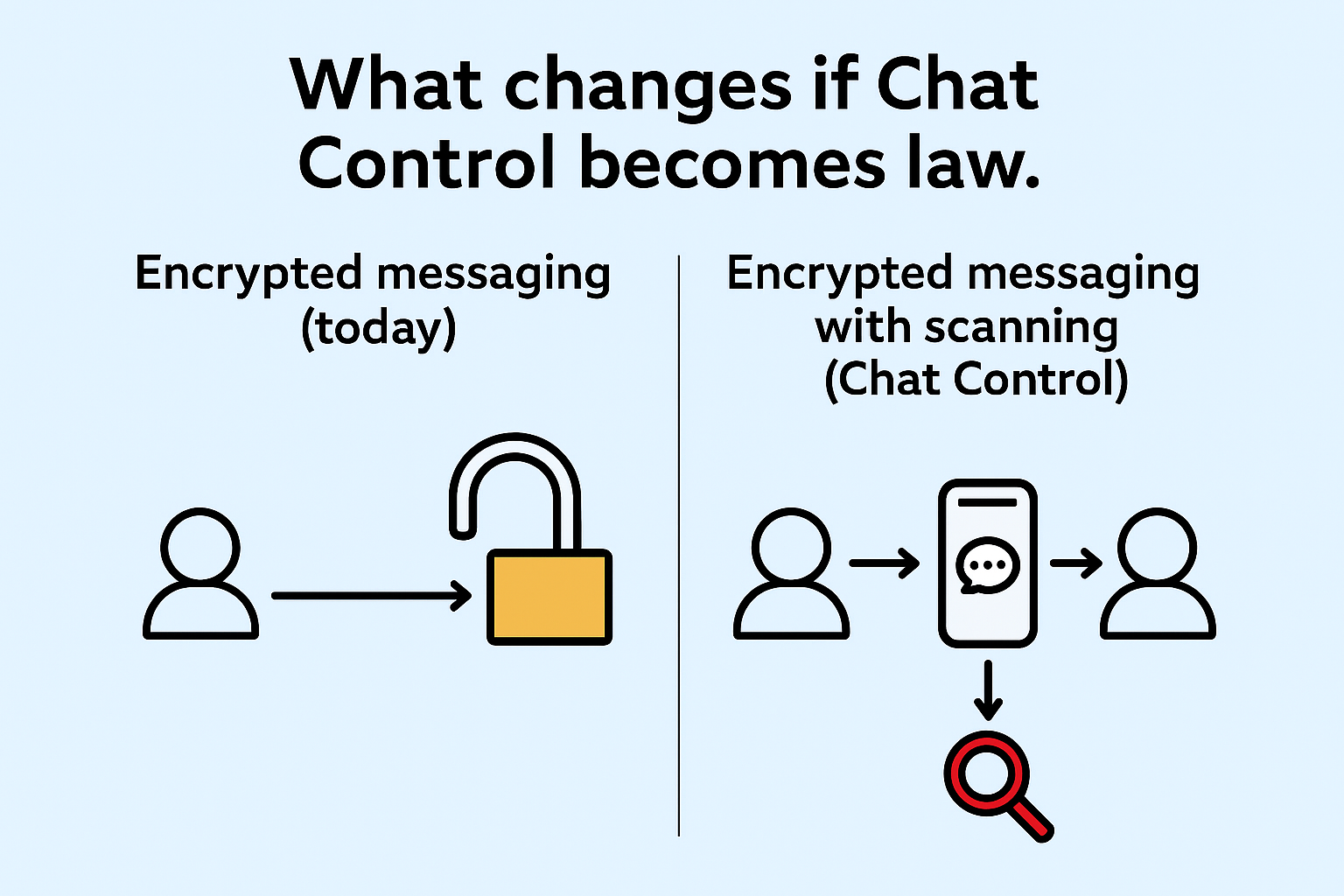

But here’s the catch: the devil is in the details. To scan encrypted chats, companies would have to build surveillance systems directly into the apps (Euronews, 2024). In effect, your phone would become a mini-police officer, checking every photo, video, and message before they leave your device. Imagine a security guard stationed in your group chat, reading everything before you hit send.
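To make the “security guard” image concrete, here is a minimal sketch of client-side scanning under simplifying assumptions. The names `BLOCKLIST`, `scan_before_send`, `placeholder_encrypt`, and `send_message` are hypothetical; matching uses exact SHA-256 fingerprints rather than whatever techniques a real deployment would use; and the “encryption” is a placeholder, not real cryptography. What it illustrates is the ordering: the check runs on the unencrypted content, on your device, before any encryption happens.

```python
import hashlib

# Hypothetical blocklist of "known bad" fingerprints. Exact SHA-256 hashes are a
# simplification; real scanning proposals discuss fuzzier matching and classifiers.
BLOCKLIST = {hashlib.sha256(b"known-bad-example").hexdigest()}


def scan_before_send(attachment: bytes) -> bool:
    """Return True if the attachment's fingerprint is on the blocklist."""
    return hashlib.sha256(attachment).hexdigest() in BLOCKLIST


def placeholder_encrypt(data: bytes) -> bytes:
    # Stand-in for the app's end-to-end encryption. NOT actual encryption.
    return bytes(reversed(data))


def send_message(attachment: bytes) -> dict:
    # Key point: the scan sees the plaintext BEFORE encryption, so encrypting
    # afterwards does not hide the content from the scanner.
    flagged = scan_before_send(attachment)
    # A real system would then report flagged items; that step is omitted here.
    return {"ciphertext": placeholder_encrypt(attachment), "flagged": flagged}


print(send_message(b"grandma's lasagna recipe")["flagged"])  # False
print(send_message(b"known-bad-example")["flagged"])          # True
```

Even in this toy version, end-to-end encryption is still “on”; the privacy loss comes from the inspection step that sits in front of it.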

Over 500 cryptographers, security experts, and tech researchers have signed an open letter warning that this move is dangerous (Tuta Mail, 2025). Why? Because encryption is like the lock on your front door. End-to-end encryption means only you and your intended recipient hold the key. If you add a “backdoor” for authorities, you’ve also left the door cracked open for hackers, cybercriminals, and oppressive governments (TechRadar, 2025).
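How can only two people hold a key when every message passes through a company’s servers? A toy sketch, assuming a textbook Diffie-Hellman exchange with a hand-picked prime (not the protocol any particular app uses, and without the authentication real protocols add to stop impersonation), shows the idea: each side sends only a public value, yet both ends compute the same secret key, which never travels over the network. The function names are hypothetical.

```python
import secrets

# Toy parameters for illustration only. Real messengers use audited protocols
# built on vetted groups or elliptic curves, not this hand-picked prime.
P = 2**127 - 1  # a Mersenne prime
G = 3


def keypair() -> tuple[int, int]:
    """Generate a (private, public) pair; only the public half is ever sent."""
    private = secrets.randbelow(P - 2) + 1  # random value in [1, P - 2]
    public = pow(G, private, P)
    return private, public


def shared_key(my_private: int, their_public: int) -> int:
    # (G^a)^b mod P == (G^b)^a mod P, so both ends derive the same number.
    return pow(their_public, my_private, P)


alice_private, alice_public = keypair()
bob_private, bob_public = keypair()

# Only alice_public and bob_public cross the network; the shared key itself
# is never transmitted, so the relaying server does not see it.
assert shared_key(alice_private, bob_public) == shared_key(bob_private, alice_public)
```

In this picture, a “backdoor” means handing some third party either a copy of that shared key or the message before it is encrypted: exactly the spare key the door analogy warns about.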

It’s like telling your neighbor, “I’ll leave my door unlocked so the police can stop by whenever they want.” Sounds noble, right? Until one day you come home to find burglars, a raccoon, and maybe even your neighbor’s odd cousin lounging in your living room. Weakening encryption isn’t selective—it leaves everyone exposed.

Figure 2: Illustrating the insecurity of encryption when a spare key exists. (Generated by Copilot)

This proposal also prompts some eyebrow-raising questions:

- Can an algorithm really tell the difference between a cat meme and harmful content?

- Do you want an app deciding whether your inside joke with friends is “suspicious”?

- How would you explain to your grandma that her lasagna recipe photo was flagged as “potentially illegal”?

Automated scanning isn’t perfect. Classifiers and matching systems produce false positives, and at the scale of billions of messages even a small error rate translates into a large number of wrongly flagged chats. What starts as a well-meaning effort to protect children could easily spiral into a mess where harmless content gets flagged, private chats are misinterpreted, and people lose trust in the apps they rely on (Electronic Frontier Foundation, 2022).
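A quick back-of-the-envelope calculation shows why false positives matter so much at scale. The sketch below uses entirely hypothetical numbers, not figures from the proposal or any cited source: one billion scanned messages, 1,000 of them harmful, and a scanner that catches 90% of harmful content while wrongly flagging 0.1% of everything else. The function `expected_flags` is an illustrative helper, not part of any real system.

```python
def expected_flags(harmful: int, harmless: int, tpr: float, fpr: float):
    """Expected outcome of scanning a batch of messages.

    harmful / harmless: how many messages of each kind are scanned
    tpr: true-positive rate (share of harmful messages correctly flagged)
    fpr: false-positive rate (share of harmless messages wrongly flagged)
    """
    true_flags = harmful * tpr
    false_flags = harmless * fpr
    # Precision: of everything flagged, what share is actually harmful?
    precision = true_flags / (true_flags + false_flags)
    return true_flags, false_flags, precision


# Purely hypothetical inputs, chosen only to illustrate the arithmetic.
true_flags, false_flags, precision = expected_flags(
    harmful=1_000, harmless=999_999_000, tpr=0.90, fpr=0.001
)
print(f"correct flags: {true_flags:,.0f}")
print(f"false flags:   {false_flags:,.0f}")
print(f"share of flags that are real: {precision:.2%}")
```

With these made-up numbers, roughly 900 correct flags would be buried under about a million false ones, so fewer than 0.1% of flagged messages would actually be harmful. Real error rates are unknown here; the point is that when harmful content is rare, even a very accurate scanner mostly flags innocent people.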

This debate goes beyond one law. It’s about whether we’re willing to trade private, secure communication for safety. Once scanning encrypted chats becomes normalized, it becomes easier to justify expanding surveillance further (TechRadar, 2025). Today it’s CSAM; tomorrow it could be dissent, protests, or criticism of those in power.

Tech companies are caught in the middle. If forced to comply, they’ll need to redesign apps for millions of users worldwide. That raises tough questions: Will people switch to apps outside EU jurisdiction? Will developers fight back in court? Or will privacy itself be sacrificed? If the EU adopts Chat Control, other governments may follow. That could lead to a world where private conversations are a luxury, not a right.

Figure 3: Comparing today’s encrypted messaging with scanning under Chat Control. (Generated by Copilot)

At its core, this debate is about privacy versus security. It’s an old dilemma, but the stakes are higher in the digital era. Strong encryption has protected activists in authoritarian states, safeguarded journalists from retaliation, and shielded everyday people from cybercrime (United Nations, 2015). Weakening it, even for a noble cause, risks dismantling those protections for everyone.

Yes, protecting children is critical. But does the solution really need to involve mass surveillance of chats? Can we target criminals without listening in on millions of innocent conversations? These are the tough questions lawmakers must address before rushing through laws that could reshape how online communication works.

- Should governments have the authority to scan private, encrypted conversations?

- Can tech companies realistically balance safety and privacy with measures like this?

- Would you keep using apps like WhatsApp or Signal if you knew your messages were screened before being delivered?

I’d love to hear your perspective. The future of private communication may hinge on where we draw the line today. And if you’ve got a funny take or wild analogy, drop it too—the comments are open.

References

TechRadar. (2025, September 9). Chat Control: Germany joins the opposition against mandatory scanning of private chats in the name of encryption. https://www.techradar.com/computing/cyber-security/chat-control-germany-joins-the-opposition-against-mandatory-scanning-of-private-chats-in-the-name-of-encryption

Tuta Mail. (2025, March 19). We will not stand by while the EU destroys encryption: Tuta Mail ready to sue the EU over Chat Control. https://tuta.com/blog/posts/we-will-not-stand-by-while-the-eu-destroys-encryption

Electronic Frontier Foundation. (2022, May 11). The EU’s draft law on CSAM scanning: A dangerous expansion of government surveillance. https://www.eff.org/deeplinks/2022/05/eus-draft-law-csam-scanning-dangerous-expansion-government-surveillance

Euronews. (2024, June 3). EU chat control law proposes scanning your messages — even encrypted ones. https://www.euronews.com/next/2024/06/03/eu-chat-control-law-proposes-scanning-your-messages-even-encrypted-ones

United Nations. (2015, May 26). Online anonymity, encryption protect rights, UN experts tell governments. https://www.ohchr.org/en/press-releases/2015/05/online-anonymity-encryption-protect-rights-un-experts-tell-governments

Truly an insightful post, Pradip! I especially appreciated how you captured the “damned if you do, damned if you don’t” nature of this issue. And you’re right: Chat Control, while intended for a noble goal, could prove to be the very menace that takes control away from individuals. A similar initiative was BULLRUN, a highly classified NSA-led project that sought to give authorized individuals backdoor access to encrypted communications, essentially breaking open end-to-end encryption.

Additionally, I liked how you pointed out that the minute the EU gains access to encrypted chats, it can use that foothold to come up with a myriad of reasons why it needs even more access to people’s private lives. It’s a messy can of worms that, sadly, is quite complicated to resolve.

Honestly, I’m on the side of individuals having a right to their privacy. Rather than opening up private chats to the government, a totally unhinged suggestion from me would be to include clauses in privacy and data protection laws that direct tech companies to store chats using blockchain technology. That way, we could rely on the blockchain’s immutability to retrieve information when needed (say, for a criminal investigation).

The point is that for every protection law that comes up, there’s a dark side that can be taken advantage of. Adversaries will blend in with the defenders to exploit the very thing protecting individuals for their own gain, so in everything we do, securing privacy should be at the forefront.

It’s quite unfortunate that we can’t secure our own privacy without a governmental body trying to gain control in some way or another. Sometimes the adversary is not some weird man-in-the-middle; it is the very systems and bodies meant to protect us and have our best interests at heart.

Thank you!

There will always be a big debate between these two contrasting viewpoints. Should we give access to our personal information so that the authorities can protect us, or should we keep our personal information private even if this is to the detriment of society? I believe that there must be a middle ground that allows the authorities to protect us while not infringing on our private lives.

I found your analogy of leaving your door unlocked to raccoons and others quite compelling. It really highlights the slippery slope of privacy.

Very eye-opening. In my opinion, this situation is quite similar to India’s mandate requiring communication platforms that use end-to-end encryption to introduce “traceability.” It boils down to the age-old privacy-versus-security trade-off, despite the good intentions. My two bits on this dilemma: adopt a targeted approach that does not involve building surveillance systems directly into the application itself but, as @Dami Ogunnupebi pointed out, tackles the problem at the policy level.

Both sides weigh equally heavily in my mind. On one hand, accessing private communication violates personal freedom, erodes trust in the companies, and raises the issue of false flags; on the other, it would significantly boost governments’ ability to detect criminal activity. Still, in my opinion this should not come at the cost of privacy. One partial middle ground I can think of is an opt-in, client-side safety feature, for example parental controls or AI-powered filters that run on the user’s device and filter out content without anyone else reading the message.

Great post

This is very similar to something I read recently. This would be a really bad move, as everyone would be under strict surveillance, even when they are just venting. Additionally, because it weakens encryption, it won’t just affect the so-called cybercriminals they are trying so hard to fight; it will also expose ordinary people, businesses, and even governments to cyberattacks. If the same backdoor is used for scanning CSAM, it will almost certainly be used by hackers and cybercriminals too. In trying to protect one group, it will end up making everyone else unsafe.

Caring for children is important, but monitoring everyone’s personal messages is not the right approach. Weakening encryption allows hackers, criminals, and even governments to take advantage of it. Once a backdoor is created, it won’t remain exclusive to the “good guys.” Safety should not require sacrificing our right to private conversations.

This has become a very common narrative lately. Any time a government tries to push a law that gives it more surveillance power, it will most likely justify it by “protecting children.” We saw this recently with the UK demanding a backdoor into Apple’s iCloud (luckily, that one didn’t go through!) and with Canadian Bill S-210 (“Protecting Young Persons from Exposure to Pornography Act”), where protecting children is right in the name, but which would, in theory, also allow total surveillance of everyone who wants to use the Internet. I really hope people will understand that “protection of children” is their parents’ task, not their government’s!

Great post! The impulse here to protect children is really understandable.

Mass client-side scanning seems like using a sledgehammer to crack a nut. When there is a backdoor, it is not just the government that will use it; criminals will eventually find their way in too. Rolling back strong encryption would weaken online security for all users and end up helping criminals by making things less safe for everyone.

There’s such a well-established dichotomy between security/protection and the invasion of privacy, and this post really touches on that. It is very concerning, though: while this may be put forward as a way to protect children, it will expose every single person and their information. I would be wary of using those applications if I knew that my conversations were not private. I like the idea Dami mentioned in one of the comments above of using blockchain technology, if applicable. Thank you for sharing.

The goal of protecting children is something everyone can and should support, but scanning private conversations? It crosses a dangerous line. End-to-end encryption was created to keep our information safe, and if a backdoor is created, it’s not just authorities who can use it; cybercriminals and even hostile governments will try to exploit it too. Safety and privacy do not have to be opposites. We should be looking for targeted solutions that address abuse without forcing millions of people to give up their privacy.

Brilliant topic, it’s a debate we all need to pay attention to.