Introduction

In today’s data-driven world, protecting our personal information has never been more critical. Privacy breaches, unauthorised surveillance, and data theft are everyday concerns. Privacy-Enhancing Technologies (PETs) are a collection of tools that keep personal data secure while still allowing its legitimate use.

This post explains the core concepts behind Privacy-Enhancing Technologies (PETs) and analyses active threats to data privacy in contemporary settings. Whether you’re a privacy-conscious individual, a developer, or someone just curious about the future of data security, you’ll find something valuable here!

[1]

What Are Privacy-Enhancing Technologies (PETs)?

Privacy-Enhancing Technologies are tools and methodologies that protect personal data by minimising how much of it is exposed and by processing it securely. Together, PETs make it possible to analyse data while protecting identities and sensitive information from unauthorised access.

Let’s break down some of the most important PETs currently transforming how we protect data.

[1][3]

1. Homomorphic Encryption (HE)

Homomorphic encryption allows a system to perform computations on encrypted data, so the data stays encrypted throughout the entire process.

- How It Works: Mathematical operations are performed directly on ciphertext, and the result, when decrypted, matches the result of operations performed on plaintext.

- Applications: Secure data analysis in cloud computing, privacy-preserving machine learning, and encrypted search engines.

- Real-World Example: Healthcare providers can perform medical data analysis on encrypted patient records without ever accessing the underlying data.

[1][4][5]
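To make the idea concrete, here is a minimal Python sketch of the Paillier cryptosystem. Paillier is only additively (partially) homomorphic, not fully homomorphic, so it is a simplified stand-in chosen because it fits in a few lines: multiplying two ciphertexts yields an encryption of the sum of their plaintexts. The function names and the tiny hard-coded primes are illustrative assumptions; this is not production-grade cryptography.

```python
import math
import secrets

def keygen(p: int, q: int):
    """Toy Paillier key generation from two distinct primes p and q."""
    n = p * q
    g = n + 1  # common simplified choice of generator
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    # mu = L(g^lam mod n^2)^-1 mod n, where L(u) = (u - 1) // n
    mu = pow((pow(g, lam, n * n) - 1) // n, -1, n)
    return (n, g), (lam, mu)

def encrypt(pub, m: int) -> int:
    """Encrypt an integer 0 <= m < n using fresh randomness r coprime to n."""
    n, g = pub
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # 1 <= r < n
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2

def decrypt(pub, priv, c: int) -> int:
    """Recover the plaintext; requires the private values lam and mu."""
    n, _ = pub
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n

def add_encrypted(pub, c1: int, c2: int) -> int:
    """Multiplying two ciphertexts adds their underlying plaintexts."""
    n, _ = pub
    return (c1 * c2) % (n * n)

pub, priv = keygen(1009, 1013)  # toy primes; real keys use primes of 1024+ bits
c_sum = add_encrypted(pub, encrypt(pub, 20), encrypt(pub, 22))
print(decrypt(pub, priv, c_sum))  # 42
```

A party holding only the public key can run `add_encrypted` without ever seeing 20 or 22; only the holder of the private key can decrypt the total.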

2. Differential Privacy

Differential privacy adds carefully calibrated noise to data or query results, so that aggregate insights stay accurate while the contribution of any individual participant remains hidden. This makes it especially useful for analysing large datasets, because researchers can draw conclusions without violating participants’ privacy.

- How It Works: A privacy parameter (ε) controls the amount of noise added. Smaller ε values provide stronger privacy guarantees but reduce data accuracy.

- Applications: Data analytics for health research, census data release, and market analysis.

- Real-World Example: Apple uses differential privacy to learn aggregate usage patterns from iPhones without learning what any individual user does.

[1][2][6]
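The role of ε can be illustrated with the Laplace mechanism, one standard way to achieve differential privacy for numeric queries. The sketch below assumes a simple counting query over a hypothetical list of ages; because adding or removing one person changes a count by at most 1, the noise scale is 1/ε. It is a central-model sketch, not the local mechanism Apple deploys on devices. Smaller ε values tend to produce noisier answers.

```python
import random

def laplace_noise(scale: float) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    return random.expovariate(1 / scale) - random.expovariate(1 / scale)

def private_count(records, predicate, epsilon: float) -> float:
    """Laplace mechanism for a counting query.

    A count has sensitivity 1 (one person changes it by at most 1),
    so the noise scale is sensitivity / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon)

# Hypothetical dataset: ages of survey participants (true answer below is 4).
ages = [23, 35, 41, 29, 52, 61, 38, 27, 45, 33]
for eps in (0.1, 1.0, 10.0):
    print(eps, round(private_count(ages, lambda a: a >= 40, eps), 2))
```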

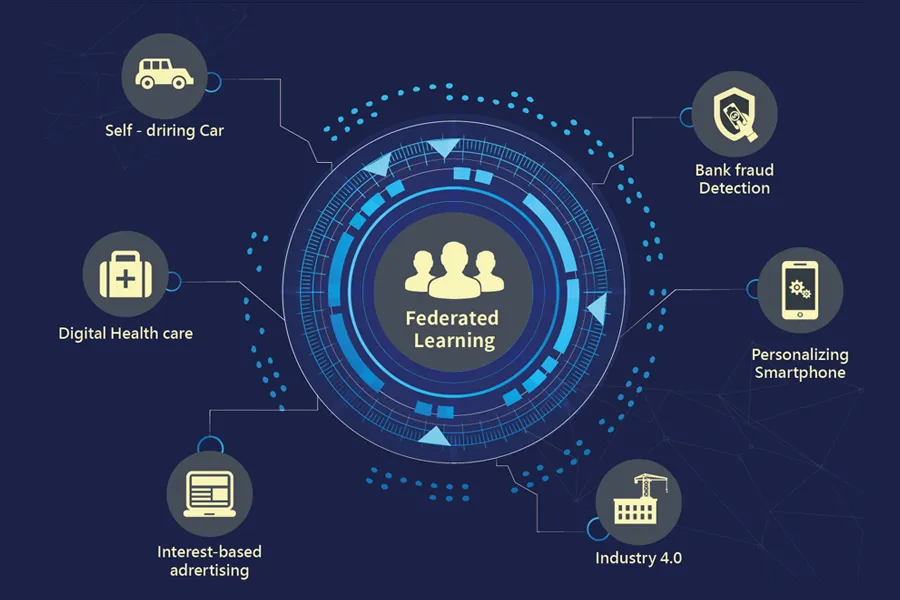

3. Federated Learning

Imagine training a machine learning model without ever exposing your data. Federated learning makes this possible: many participants collaborate to train a shared model while each participant’s raw data stays on its own device or server.

- How It Works: Each device trains the model on its local data and sends only the resulting model updates to a central server, which combines them into an improved global model. Because raw data never leaves the device, privacy is better protected while the model’s accuracy improves.

- Real-World Example: Google has used federated learning on mobile devices (for example, to improve next-word prediction in the Gboard keyboard), so your data is processed locally on the device instead of being sent to centralised servers.

[1][7][16]
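The training loop described above can be sketched with federated averaging (FedAvg), a widely used aggregation scheme. In this toy version, assumed for illustration, the model is a single weight `w` fitted to points on the line y = 2x; each hypothetical client runs a few local gradient steps on its own data, and the server only ever sees the returned weights, which it averages in proportion to each client’s sample count.

```python
def local_update(w: float, data, lr: float = 0.01, epochs: int = 5) -> float:
    """Client side: a few epochs of SGD on squared error for the model y = w * x."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x  # derivative of (w*x - y)^2 with respect to w
            w -= lr * grad
    return w

def federated_averaging(clients, rounds: int = 20, w: float = 0.0) -> float:
    """Server side: broadcast w, collect local weights, average them by sample count."""
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        # Each client trains locally; only the resulting weight is sent back.
        local_weights = [local_update(w, data) for data in clients]
        w = sum(len(data) * lw for data, lw in zip(clients, local_weights)) / total
    return w

# Hypothetical clients, each privately holding points on the line y = 2x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0)],
]
print(round(federated_averaging(clients), 3))  # 2.0
```

Weighting by sample count means clients with more data have proportionally more influence on the global model, which is the usual FedAvg choice.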

4. Secure Multi-Party Computation (SMPC)

SMPC allows multiple parties to jointly compute a function over their inputs while keeping those inputs private.

- How It Works: Each party protects its input, for example by encrypting it or splitting it into random secret shares, and the parties compute on these protected values. Only the final result is revealed; individual inputs stay hidden.

- Applications: Collaborative fraud detection, privacy-preserving auctions, and joint financial analysis.

- Example: Banks can use SMPC to detect money laundering patterns without sharing customer transaction data.

[1][8]
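One common building block for SMPC is additive secret sharing. The sketch below, with hypothetical input values, shows three parties computing the sum of their private inputs: each input is split into random shares modulo a prime, each party adds up the shares it receives, and combining those partial sums reveals only the total. It assumes honest-but-curious parties and leaves out the networking and the protections against malicious behaviour that real protocols need.

```python
import secrets

PRIME = 2**61 - 1  # a Mersenne prime; all share arithmetic is done modulo PRIME

def share(value: int, n_parties: int) -> list[int]:
    """Split `value` into n random shares that sum to `value` mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares

def secure_sum(private_inputs: list[int]) -> int:
    n = len(private_inputs)
    # Party i splits its input and (conceptually) sends share j to party j.
    all_shares = [share(v, n) for v in private_inputs]
    # Party j sums the shares it received; each share on its own looks uniformly random.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    # Publishing and adding the partial sums reveals only the total.
    return sum(partial_sums) % PRIME

# Hypothetical counts of flagged transactions held privately by three banks.
print(secure_sum([120, 340, 75]))  # 535
```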

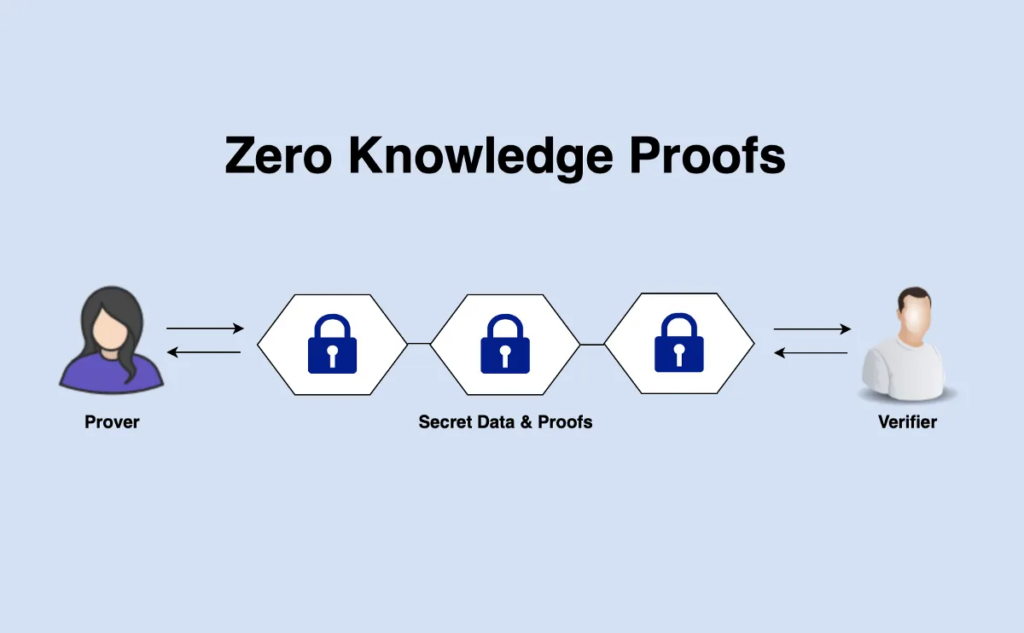

5. Zero-Knowledge Proofs (ZKPs)

ZKPs allow one party (the prover) to convince another (the verifier) that a statement is true without revealing any information beyond the fact that it is true. This makes them a powerful tool for privacy in digital transactions.

- How It Works: The prover convinces the verifier of the truth of a statement using cryptographic protocols, without disclosing the underlying data.

- Applications: Blockchain transactions, identity verification, and secure authentication.

- Example: Zcash uses ZKPs to enable private cryptocurrency transactions.

[1][9][10]
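The Schnorr identification protocol is a classic, compact example of a zero-knowledge proof of knowledge: the prover shows it knows the secret x behind a public value y = g^x mod p without revealing x. It is not the zk-SNARK construction Zcash uses, and the tiny group parameters below are assumptions chosen for readability (real deployments use groups of at least 256-bit order). The protocol is zero-knowledge against an honest verifier who picks its challenge at random.

```python
import secrets

# Toy group parameters (assumed for illustration): P = 2Q + 1 with P and Q prime,
# and G = 4 generates the subgroup of prime order Q in the integers mod P.
P, Q, G = 2039, 1019, 4

def keygen():
    x = secrets.randbelow(Q - 1) + 1  # prover's secret, 1 <= x < Q
    y = pow(G, x, P)                  # public value
    return x, y

def commit():
    r = secrets.randbelow(Q)          # fresh one-time randomness, kept private
    return r, pow(G, r, P)            # t = G^r is sent to the verifier

def respond(r, x, c):
    return (r + c * x) % Q            # the random r masks x inside s

def verify(y, t, c, s):
    return pow(G, s, P) == (t * pow(y, c, P)) % P  # checks G^s == t * y^c

x, y = keygen()
r, t = commit()            # prover -> verifier: t
c = secrets.randbelow(Q)   # verifier -> prover: random challenge
s = respond(r, x, c)       # prover -> verifier: s
print(verify(y, t, c, s))  # True
```

The verifier learns only that the equation holds; a prover who does not know x cannot answer a random challenge except by guessing it in advance.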

Examples of Threats to PETs You Need to Know

1. Model Extraction Attacks on Federated Learning

Federated learning allows multiple parties to collaboratively train machine learning models without sharing raw data. However, researchers have shown that an attacker can exploit the model updates shared during federated learning to reconstruct the training data.

- Attack Vector: The attacker participates in the federated learning process and applies gradient inversion techniques to the shared model updates, recovering sensitive training data.

Mitigation:

- Apply differential privacy to model updates to add noise and prevent inversion.

- Use secure aggregation protocols to obscure individual updates.

[11]
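Why shared updates can leak data is easiest to see in the simplest case: a logistic-regression model updated on a single example. The gradient with respect to each weight equals the gradient with respect to the bias multiplied by the corresponding input feature, so dividing one by the other recovers the input exactly. The model and record below are hypothetical; practical gradient inversion attacks on deep networks instead optimise a dummy input until its gradients match the observed ones. The `clip_and_noise` function sketches the differential-privacy mitigation in the style of DP-SGD (clip the update’s norm, then add Gaussian noise); calibrating `sigma` to a formal ε is out of scope here.

```python
import math
import random

def sample_gradient(w, b, x, y):
    """Gradient of the logistic (binary cross-entropy) loss for ONE example."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1 / (1 + math.exp(-z))
    delta = p - y                      # dLoss/dz
    grad_w = [delta * xi for xi in x]  # dLoss/dw_i = delta * x_i
    grad_b = delta                     # dLoss/db = delta
    return grad_w, grad_b

def invert_gradient(grad_w, grad_b):
    """Attacker's view of a shared update: x_i = (dLoss/dw_i) / (dLoss/db)."""
    return [g / grad_b for g in grad_w]

def clip_and_noise(grads, clip_norm, sigma):
    """DP-SGD-style defence: bound the update's L2 norm, then add Gaussian noise."""
    norm = math.sqrt(sum(g * g for g in grads))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * factor + random.gauss(0.0, sigma * clip_norm) for g in grads]

w, b = [0.1, -0.2, 0.05], 0.0
private_x, private_y = [3.0, 7.5, 1.2], 1  # hypothetical client record
grad_w, grad_b = sample_gradient(w, b, private_x, private_y)
print(invert_gradient(grad_w, grad_b))     # recovers private_x up to rounding

noisy = clip_and_noise(grad_w + [grad_b], clip_norm=1.0, sigma=1.0)
print(invert_gradient(noisy[:-1], noisy[-1]))  # generally no longer matches private_x
```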

2. Data Poisoning Attacks on Differential Privacy

Differential privacy relies on adding noise to protect individual privacy. However, researchers have shown that it is vulnerable to data poisoning, where malicious actors inject carefully crafted data to skew the statistics that the mechanism reports.

- Attack Vector: In local differential privacy protocols, each user adds noise to their own data before sending it, so the server cannot distinguish genuine reports from fake ones. The attacker injects fake users that send crafted reports, for example to inflate the estimated frequency of items the attacker wants to promote.

Mitigation:

- Use robust statistical methods to detect and filter out poisoned data.

- Implement multi-layered privacy mechanisms to enhance resilience.

[12][13]
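The attack in [13] targets local differential privacy protocols. The sketch below uses randomised response, the simplest such protocol, for a yes/no attribute: each genuine user flips their answer with a probability set by ε, and the server corrects for this noise when estimating the fraction of "yes" answers. The fake users are an assumption standing in for the attacker: they skip the perturbation and always answer "yes". Because the estimator divides by (2p - 1), each fake report moves the estimate more than a genuine report would, so 500 fake users among 10,500 reports noticeably inflate the result. All numbers are hypothetical.

```python
import math
import random

def perturb(bit: int, epsilon: float) -> int:
    """Randomised response: keep the true bit with probability e^eps / (e^eps + 1)."""
    p_keep = math.exp(epsilon) / (math.exp(epsilon) + 1)
    return bit if random.random() < p_keep else 1 - bit

def estimate_fraction(reports, epsilon: float) -> float:
    """Unbiased estimate of the true fraction of 1s from randomised-response reports."""
    p = math.exp(epsilon) / (math.exp(epsilon) + 1)
    observed = sum(reports) / len(reports)
    # E[observed] = (1 - p) + f * (2p - 1), solved for the true fraction f
    return (observed - (1 - p)) / (2 * p - 1)

def simulate(n_genuine=10_000, true_fraction=0.10, n_fake=500, epsilon=1.0):
    genuine_bits = [1 if random.random() < true_fraction else 0 for _ in range(n_genuine)]
    genuine_reports = [perturb(bit, epsilon) for bit in genuine_bits]
    # Fake users skip perturbation and always report the attacker's target value.
    fake_reports = [1] * n_fake
    clean = estimate_fraction(genuine_reports, epsilon)
    poisoned = estimate_fraction(genuine_reports + fake_reports, epsilon)
    return clean, poisoned

clean, poisoned = simulate()
print(f"clean estimate: {clean:.3f}, poisoned estimate: {poisoned:.3f}")
```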

3. Zero-Knowledge Proof Vulnerabilities in Blockchain

A comprehensive security audit by Veridise of the o1js library, a TypeScript framework for zk-SNARKs and zkApps, identified three critical vulnerabilities.

- Attack Vector: The vulnerabilities stemmed from flaws in the implementation of zero-knowledge proofs within the o1js library.

- Impact: These issues posed significant risks to applications utilizing o1js, potentially allowing unauthorized access or manipulation of sensitive data.

Mitigation:

Developers are advised to promptly update to the latest versions of the o1js library and conduct thorough security audits of their ZKP implementations.

[9][15]

Challenges and Emerging Threats to PETs

While Privacy-Enhancing Technologies (PETs) offer promising solutions, several challenges remain:

- Evolving Cyber Threats: Attacks such as model extraction and data poisoning present continuous risks.

- Implementation Complexity: Many PETs are complex to implement, requiring ongoing research to improve scalability and usability.

- Privacy vs. Utility: Balancing privacy protection with the need for accurate and useful data can be difficult in some applications.

- Vulnerabilities in Systems: Issues like zero-knowledge proof vulnerabilities, which can allow unauthorized access to data, need to be mitigated.

[1]

Conclusion

Realising the full potential of Privacy-Enhancing Technologies (PETs) requires progress on several fronts at once. Research should focus on two main areas: improving performance and scalability, and simplifying deployment while educating the public about adopting PETs.

Addressing conflicts between privacy and utility, and finding the right balance between anonymity and accountability, requires continuous innovation and ethical commitment. Emerging threats, such as AI-powered attacks and model extraction vulnerabilities, require organisations to keep innovating while staying vigilant.

At the end of the day, the future of privacy depends on how well we adapt to new tech and emerging risks. By staying informed, using privacy tools, and working with experts, we can make sure our data stays safe for years to come.

References

[1] Information Commissioner’s Office. (2022, September). Chapter 5: Privacy-enhancing technologies (PETs): Draft anonymisation, pseudonymisation and privacy enhancing technologies guidance. https://ico.org.uk/media/about-the-ico/consultations/4021464/chapter-5-anonymisation-pets.pdf

[2] Dulanga, C. (2021, May 10). What are the 3 variants of differential privacy? Medium. https://chameeradulanga.medium.com/what-are-the-3-variants-of-differential-privacy-d65f780f43b8

[3] Le Callonnec, S. (2023, January 26). Introduction to privacy enhancing technologies (PETs). Mastercard Developers. https://developer.mastercard.com/blog/introduction-to-privacy-enhancing-technologies/

[4] Ghosh, A. (2020, December 29). What homomorphic encryption can do. Customize Windows. https://thecustomizewindows.com/2020/12/what-homomorphic-encryption-can-do/

[5] Vengadapurvaja, A. M., Nisha, G., Aarthy, R., & Sasikaladevi, N. (2017). An efficient homomorphic medical image encryption algorithm for cloud storage security. Procedia Computer Science, 115, 643-650. https://doi.org/10.1016/j.procs.2017.09.150

[6] Achar, S. (2018). Data privacy-preservation: A method of machine learning. ABC Journal of Advanced Research, 7(2), 123-129. https://doi.org/10.18034/abcjar.v7i2.654

[7] Cloud Hacks. (2024, March 1). Federated learning: A paradigm shift in data privacy and model training. Medium. https://medium.com/@cloudhacks_/federated-learning-a-paradigm-shift-in-data-privacy-and-model-training-a41519c5fd7e

[8] Dodiya, K., Radadia, S., & Parikh, D. (2024). Differential privacy techniques in machine learning for enhanced privacy preservation. Journal of Emerging Technologies and Innovative Research, 11, 148. https://doi.org/10.0208/jetir.2024456892

[9] Lavrenov, D. (2019, January 25). Using zero-knowledge proof, a blockchain transaction can be verified while maintaining user anonymity. Altoros. https://www.altoros.com/blog/zero-knowledge-proof-improving-privacy-for-a-blockchain/

[10] Nnam, D. (2022, August 20). Beginner’s guide to understanding zero-knowledge proofs. Medium. https://medium.com/@darlingtonnnam/beginners-guide-to-understanding-zero-knowledge-proofs-cadc4e2c23a8

[11] Liu, P., Xu, X., & Wang, W. (2022). Threats, attacks and defenses to federated learning: Issues, taxonomy and perspectives. Cybersecurity, 5, 4. https://doi.org/10.1186/s42400-021-00105-6

[12] Lyons-Cunha, J. (2024, November 19). What is data poisoning? Built In. https://builtin.com/artificial-intelligence/data-poisoning

[13] Cao, X., Jia, J., & Gong, N. Z. (2021). Data poisoning attacks to local differential privacy protocols. USENIX Security Symposium. https://www.usenix.org/system/files/sec21fall-cao.pdf

[14] Horigome, H., Kikuchi, H., Fujita, M., & Yu, C.-M. (2024). Robust estimation method against poisoning attacks for key-value data with local differential privacy. Applied Sciences, 14(14), 6368. https://doi.org/10.3390/app14146368

[15] Veridise. (2024, February 14). Highlights from the Veridise O1JS v1 audit: Three zero-knowledge security bugs explained. Medium. https://medium.com/veridise/highlights-from-the-veridise-o1js-v1-audit-three-zero-knowledge-security-bugs-explained-2f5708f13681
