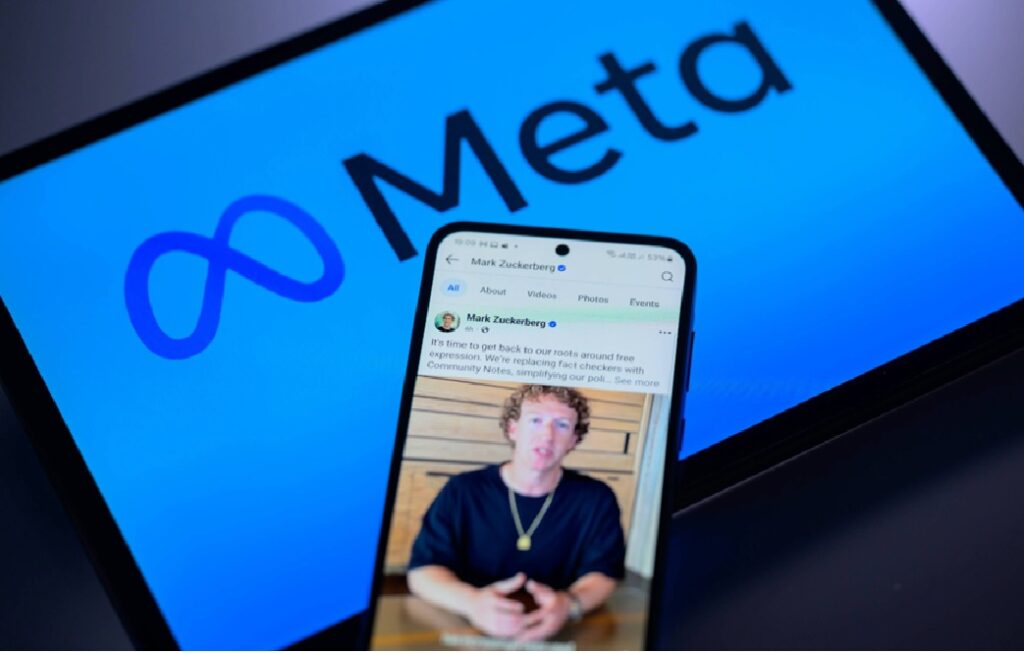

Meanwhile, Meta has dropped its third-party fact-checking program. The latest update on moderation policies and practices from CEO Mark Zuckerberg, dated January 7, 2025, set out a new direction that the company attributes to a commitment to free speech[1].

Critics say the move by Zuckerberg to “get back to our roots around free expression” could open the door to more disinformation, scams, and fraud. Anytime you relax controls, fraud goes up. With little government regulatory oversight, the decision does little to solve a long-standing problem: the lack of accountability by social media platforms. More importantly, Section 230 of the Communications Decency Act shields social media companies from liability for content that users place on their websites, and that law dates back to the Internet of 1996. Sadly, these platforms are much uglier places today: pig butchering, money muling, crypto scams, disinformation, and hate speech are just some of the additions of the two-plus decades since.

Over the last several years, Facebook has also had its share of scandals. Among the problems Facebook has faced, data breaches stand out[2]. More than 530 million Facebook users had their personal data leaked on online forums in 2021, although the data had been collected back in 2019. Security vulnerabilities and data breaches at Facebook have been reported frequently since 2010. Assessing its cybersecurity capacity against four functions of the NIST Cybersecurity Framework (Identify, Protect, Detect, and Respond), we can find the missing controls at the world’s biggest social media outlet, as follows.

Under the Identify function, Facebook’s failure to comply with the GDPR, and the resulting public posting of 530 million users’ data[3], points to a critical weakness in its awareness of legal and regulatory obligations. This can expose Facebook to substantial fines and severe reputational damage. To reduce these risks, Facebook needs to engage local legal counsel, continually monitor regulatory changes, and document internal policies and procedures. The legal and compliance departments should work together to monitor for non-compliance and correct it on an ongoing basis.

Under the Protect function, Facebook lacks proper mechanisms to guard user data and its own internal data against unauthorized viewing or modification by other parties. The company also trains its developers poorly on secure data storage; for instance, 540 million user records were stored on a public cloud server[4]. In the wake of this, Facebook should tighten its access controls, for example by monitoring and frequently auditing the accounts that have been granted permissions. Furthermore, all employees need comprehensive cybersecurity training on data security and the consequences of breaches. This training should be reinforced through regular sessions to instill a strong security culture.

Under the Detect function, because Facebook does not continuously monitor its network, vulnerabilities may remain undetected, like the 2018 bug that caused posts by 14 million users to be shared publicly[5]. This raises the risk that vulnerabilities escalate and affect the whole system, disrupting operations. To avoid this, Facebook needs to conduct continuous vulnerability testing, including internal stress tests, and maintain a robust continuity plan. A dedicated monitoring system is necessary to identify vulnerabilities and record them so that experts can fix them promptly.

Under the Respond function, Facebook’s continued failure to act on known vulnerabilities, from 2007 until today, reflects a profound breakdown in incident containment[6]. The longer this pattern persists, the more vulnerabilities and associated risks pile up, and the harder mitigation becomes later on. User trust then declines as people come to see Facebook as untrustworthy. Facebook therefore needs strong monitoring and analysis to find vulnerabilities, classify them, and assign them to focused teams or automated remediation, while keeping adequate records to prevent recurrence.
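The classify, follow up, and record steps described above can be sketched in a few lines. This is only an illustrative sketch, not Facebook's actual process: the `Finding` record, the `overdue` helper, and the remediation deadlines in `SLA_DAYS` are hypothetical, while the severity bands follow the CVSS v3 qualitative rating scale.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional

# Hypothetical remediation deadlines in days per severity band (illustrative only).
SLA_DAYS = {"critical": 7, "high": 30, "medium": 90, "low": 180}


@dataclass
class Finding:
    """One recorded vulnerability (hypothetical record layout)."""
    title: str
    score: float                  # CVSS-style base score, 0.0 to 10.0
    reported: date
    fixed: Optional[date] = None  # None while the finding is still open


def classify(score: float) -> str:
    """Map a 0-10 score to a severity band (CVSS v3 qualitative scale)."""
    if score >= 9.0:
        return "critical"
    if score >= 7.0:
        return "high"
    if score >= 4.0:
        return "medium"
    return "low"


def overdue(findings: List[Finding], today: date) -> List[Finding]:
    """Return open findings older than their deadline, most severe first."""
    late = [
        f for f in findings
        if f.fixed is None
        and (today - f.reported).days > SLA_DAYS[classify(f.score)]
    ]
    return sorted(late, key=lambda f: f.score, reverse=True)


if __name__ == "__main__":
    log = [
        Finding("Public cloud bucket", 9.8, date(2025, 1, 1)),
        Finding("Verbose error page", 5.0, date(2025, 1, 1)),
    ]
    for f in overdue(log, date(2025, 1, 15)):
        print(classify(f.score), f.title)
```

Keeping the record of each finding, including its fix date, is what makes it possible to spot repeat issues later; the deadline table is the part a real team would tune to its own risk appetite.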

Dropping third-party fact-checkers and weakening its hateful content guidelines are two moves by Meta that raise significant brand safety red flags and are likely to exacerbate existing criticisms. The impact on advertisers remains to be seen, but the potential for more harmful content could drive users away, further accelerating the fragmentation of social media. Meta, however, says it is committed to advertisers and is providing brand safety tools to help them navigate the changing landscape. The engagement-driven re-prioritization might temporarily spark user activity and boost appeal to advertisers. In the short run, these changes are unlikely to affect marketers in a large way, but they do call for vigilance about how users respond. Marketers should closely monitor audience response, ad performance, and engagement trends, using all the brand safety tools available.

Conclusion

Without fact-checking, Facebook becomes a breeding ground for misinformation, where users are influenced by false stories and conspiracy theories. This in turn creates cybersecurity risks, such as clicking on links that contain malware or accidentally giving away personal information. Scammers can imitate trusted individuals or organizations more easily, luring people into phishing attacks or financial fraud. Fake profiles and misleading posts become much more convincing without verification mechanisms that might help users avoid deception.

Moreover, it erodes trust while leaving users more gullible: they become easier to manipulate and less likely to question suspicious content. The result would be a proliferation of destructive content, with cyberbullying, hate speech, and harassment all going unchecked and making the atmosphere noxious. That drives users away from the site, makes it an insecure place, and reduces its overall credibility.

Furthermore, misinformation about cybersecurity best practices leads users to take on higher risks. Where there is no accountability for misinformation, bad actors will go all out to exploit the platform. Overall, giving up fact-checking compromises user safety, weakens data privacy protection, and lowers cybersecurity defenses.

References

- https://about.fb.com/news/2025/01/meta-more-speech-fewer-mistakes/

- https://www.fdazar.com/class-action/facebook-data-breach/

- https://www.businessinsider.com/stolen-data-of-533-million-facebook-users-leaked-online-2021-4

- https://firewalltimes.com/facebook-data-breach-timeline/

- https://www.bleepingcomputer.com/news/security/facebook-bug-caused-new-posts-by-14-million-users-to-be-shared-publicly/

- https://www.torys.com/our-latest-thinking/publications/2024/09/federal-court-of-appeal-finds-that-facebook-breached-obligations-under-federal-privacy-law

- Meta Ends Fact-Checking on Facebook and Instagram: What it Means for Advertisers, Basis Technologies. https://basis.com/blog/meta-ends-fact-checking-on-facebook-and-instagram-what-it-means-for-advertisers

- https://www.shs-conferences.org/articles/shsconf/pdf/2023/04/shsconf_sdmc2022_03013.pdf

- https://hsph.harvard.edu/news/metas-fact-checking-changes-raise-concerns-about-spread-of-science-misinformation/

I absolutely agree, Fahim! Facebook is a breeding ground for misinformation. Most people even use Facebook as their news channel, and they believe everything they read there! They click their time away without considering the risk of unverified links, which could give cybercriminals access to their devices. Facebook needs to do more to provide a secure platform for its users and to put more checks on who has access to the platform.

Great post, Fahim. Facebook turns into a breeding ground for misinformation, where false narratives and conspiracy theories sway people. This not only opens up cybersecurity risks like clicking on malicious links or accidentally sharing personal info but also makes it easier for scammers to pose as someone trustworthy, tricking users into phishing scams or financial fraud. Without verification, fake profiles and deceptive posts seem all too real, making it tough to tell the truth from the lies.

Meta’s response to privacy and security concerns often feels reactive rather than proactive, only addressing issues after they become major public problems. They seem more focused on maximizing user engagement and advertising revenue, which often takes priority over user safety. Unfortunately, people continue to use Facebook despite its security flaws because it offers an easy way to stay connected with family and friends, serves as a convenient platform for messaging, news, and entertainment, and provides a sense of community through groups and shared interests. Many have developed a habit of using it over the years (guilty of it!), making it a familiar and trusted tool for a variety of activities. However, it is also crucial that Meta starts taking user safety more seriously and implements stronger measures to safeguard their platform.

Nicely written, Fahim! Your analysis beautifully highlights the risks of Meta’s decision to abandon fact-checking, which could fuel disinformation, scams, and cybersecurity threats. By putting interaction ahead of security, Meta runs the risk of further undermining confidence and allowing malicious actors to take advantage of the platform without restraint. In the absence of strict content filtering, false information and cyberthreats will spread, making consumers more susceptible to deception, manipulation, and privacy violations.

That is a very insightful post, Fahim. It is quite overwhelming to see the history of data privacy issues that Facebook has run into since 2010. The recent decision to end the fact-checker program leaves me with mixed and puzzled feelings. Fact-checkers were useful in preventing violence, harassment, and scams, but unfortunately in some countries they were used to suppress freedom of speech, especially political views. Fact-checkers were human agents who filtered posts based on their own judgment and their reading of the policy, which left room for error and bias. There were also ways around them: users could search for fact-checker accounts and block them, especially since those accounts used patterned names. Even so, I was hesitant to believe that this move by Facebook was about cultivating freedom, and then I came across an article explaining the real cause of the decision. According to Marshall and Goda, the company would fire its fact-checkers and instead rely on its users, with the help of AI, to police Facebook and Instagram for false or misleading posts. “The company will also move its content moderation team from California to Texas, and lift restrictions designed to protect immigrants and LGBTQ+ people from hate speech” (Lisa Marshall & Nicholas Goda, 2025)[1]. Facebook hits two birds with one stone: it gains a financial advantage by saving the cost of hired fact-checkers, and it takes a political position that works as damage mitigation for the new political changes.

[1] Marshall, L. and Goda, N. (2025). Why Meta fired its fact-checkers and what you can do about it, University of Colorado Boulder, CU Boulder Today. Available at: https://www.colorado.edu/today/2025/01/10/why-meta-fired-its-fact-checkers-and-what-you-can-do-about-it (Accessed: 30 January 2025).

Good writing, Fahim!

Facebook is the name that comes up most often when discussing some of the biggest data breaches in the last decade. The points you raised, such as not having proper mechanisms to protect user and internal data from unauthorized viewing or modification, poor security training for their developers, the lack of continuous network monitoring and potentially undetected vulnerabilities, are very concerning. I used to frequently receive targeted ads based on my conversations which suggests that the Facebook app might also be eavesdropping.

Really insightful! You’ve done a fantastic job connecting the dots between Facebook’s recent decisions and the increasing risks users are facing, Fahim. It’s concerning how removing fact-checking could make it even easier for scammers to target unsuspecting people. This situation really underscores the importance of social media platforms taking greater responsibility for their users’ safety. Moving forward, I hope we see more focus on ensuring these platforms do a better job of protecting users and holding themselves accountable for the content they allow.

Great analysis, Fahim! Your breakdown of Facebook’s cybersecurity gaps using the NIST framework was insightful, especially the lack of continuous monitoring and proper access controls. The history of data leaks, such as the 530 million-user breach, shows that these weaknesses are not new. If Meta is cutting back on fact-checking, it might also be reducing investments in security infrastructure, which could expose more users to scams, phishing attacks, and financial fraud.

I totally agree, Fahim! Facebook has become a hotspot for misinformation, with so many people relying on it for news without questioning its credibility. On top of that, blindly clicking on unverified links makes it easy for cybercriminals to exploit users. Despite its flaws, Facebook remains a go-to for staying connected, messaging, and entertainment, which is why people keep using it—even with its history of data breaches and security lapses. Meta’s approach to privacy often feels like an afterthought, reacting only when things go wrong rather than preventing issues in the first place. The lack of proper monitoring, weak access controls, and even concerns about the app eavesdropping are pretty alarming. And if they’re cutting back on fact-checking, who’s to say they aren’t also reducing security investments? Users deserve a safer platform, and it’s about time Meta stepped up.

Great article, Fahim! Meta’s decision to drop fact-checking makes it even harder for organizations, governments, and cybersecurity professionals to control digital threats. Besides increasing scams and false information, this also paves the way for AI-driven fraud. Deepfake technology and generative AI are already being used for social engineering attacks, and without fact-checking, Facebook is handing cybercriminals a global launchpad for deception. The bigger issue is the precedent this sets; if Meta can do it, what stops others? Without accountability, misinformation and cyber threats won’t be the exception, but the norm. This is no longer just a Facebook problem. It’s a wake-up call for regulators, cybersecurity professionals, and even advertisers, who will have to decide whether they want to align with a platform that prioritizes engagement over safety.

Great insight, Fahim! Meta’s failure to comply with GDPR and encrypt user data exposes significant security gaps. Without fact-checking, phishing scams thrive through unchecked fake profiles. The slow response to vulnerabilities only makes things worse. To improve, Meta should enforce end-to-end encryption, conduct mandatory breach simulations, and implement AI-driven scam detection to proactively protect users. Additionally, they should take some steps towards their compliance with personal privacy regulations.

Nice post, Fahim! I love the unique perspective on cybersecurity, which is very interesting. I agree with you, and I strongly disagree with Meta’s decision to drop fact-checking and DEI programs. I believe Meta’s decision is based on political factors and not on a desire to create a safer and more open environment for all.

While it is important to maintain free speech, freedom comes with a level of respect and an understanding of what is freedom and what is hate. Hate speech is illegal in Canada, and making hate statements in a public place can be punishable by up to two years’ imprisonment. This is a great example of why free speech cannot be used as an excuse. That being said, Meta’s decision is just wrong. It is not a commitment to free speech, and using free speech as the main reason to drop fact-checking is the start of a disaster.

In your post, you listed Facebook’s longstanding security issues and its history of security failures, and I do agree with you: they always make changes after something has gone wrong instead of being proactive. This decision could lead to some major issues, and the pattern would repeat, with change coming only after the damage is done. Also, fact-checking is very important for preventing security threats, especially these days when the number of security threats is rising, so Meta’s decision is really not a good choice.

The article highlights Facebook’s removal of its fact-checking program, which could increase disinformation, scams, and fraud. It also points out the company’s history of data breaches, like the 530 million users’ data leak, and cybersecurity weaknesses, such as poor access control and lack of continuous vulnerability monitoring. The decision to reduce content moderation may lead to harmful content, eroding trust and platform security. Stronger security practices and oversight are needed to protect users.